I recently needed a basic Kubernetes (k8s) Cluster, which I also needed to have running locally in my vSphere Home Lab for testing purposes. I know there are a number of great blog articles out there that show you how to set up your own k8s from scratch, including a recent blog series from Myles Gray. However, I was looking for something quick that I could consume without requiring any setup. To be honest, installing your own k8s from scratch is so 2017 😉

If you ask most people, they simply want to consume k8s as an integrated solution that just works, without having to worry about installing and managing the underlying components that make up k8s. VMware PKS and PKS Cloud are two great examples of this, where Pivotal and VMware provide a comprehensive solution (including Software Defined Networking) for managing the complete lifecycle (Day 0 to Day N) of Enterprise k8s, whether it runs within your own datacenter or as a public cloud service. For my exploratory use case, PKS was overkill, and I also did not have the required infrastructure in this particular environment, so I had to rule it out for now.

While searching online, I accidentally stumbled onto a recent VMware Open Source project called sk8s, short for Simple Kubernetes (k8s), which looked really interesting. At first glance, a few things stood out to me immediately. The project was created by none other than Andrew Kutz. For those not familiar with Andrew's work, he famously created the Storage vMotion UI plugin for the vSphere C# Client before VMware had a native UI for the feature. He also created simDK, the first vCenter Simulator back in the day, which was widely used by a number of customers, including myself. I knew Andrew had joined our Cloud Native Business Unit (CNABU), but I was not sure what he was up to these days. I guess I now know 🙂: he is helping both VMware and the OSS community with k8s development.

The primary audience for sk8s is developers of k8s, as called out in the README:

- For developers building and testing Kubernetes and core Kubernetes components

- Capable of deploying any version of Kubernetes (+1.10) on generic Linux distributions

- Designed to deploy single-node, multi-node, and even multi-control plane node clusters

- Able to deploy nodes on DHCP networks with support for both node FQDNs and IPv4 addresses

- A single, POSIX-compliant shell script, making it portable and customizable

That said, I found that it could also serve another purpose: non-production k8s testing. The biggest draw for me was that sk8s is provided as an OVA, which can easily deploy a number of configurations by simply cloning itself. This means I can have something up and running in minutes, which fits the bill perfectly. Below are detailed instructions for getting sk8s running locally in your vSphere environment. I ran into a couple of issues, so I figured it was worth sharing step-by-step instructions and providing a bit more detail on what is actually happening.

Prerequisites:

- vCenter Server running 6.5 or 6.7

- DHCP-enabled network with internet access

- govc

Limitations:

- sk8s currently has an issue: if you have multiple vSphere Datacenters in your vCenter Server, it cannot proceed with the cloning operation because of the way it looks up the source VM. A workaround is to create a user whose access is limited to the desired vSphere Datacenter, or simply to have a single vSphere Datacenter.
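To check ahead of time whether this limitation applies to you, you can count the Datacenters govc can see. The sketch below assumes govc is installed and the GOVC_* variables from Step 6 are exported; `govc find / -type d` lists Datacenter objects, and `check_single_datacenter` is a hypothetical helper name.

```shell
# Hypothetical helper: warn when more than one vSphere Datacenter is visible,
# since sk8s currently cannot locate its source VM in that case.
# Assumes govc is on PATH and GOVC_URL/GOVC_USERNAME/GOVC_PASSWORD are exported.
check_single_datacenter() {
    # "-type d" restricts the search to Datacenter objects; count non-empty lines
    count=$(govc find / -type d | grep -c .)
    if [ "$count" -gt 1 ]; then
        echo "Found $count Datacenters; sk8s cloning may fail" >&2
        return 1
    fi
    echo "Found $count Datacenter(s); OK for sk8s"
}

# Usage:
#   check_single_datacenter || echo "consider a user scoped to one Datacenter"
```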

Step 1 - Install govc on your local desktop, which must have access to your vSphere environment. If you have not installed govc yet, the quickest way is to simply download a release binary. Below is an example of installing the v0.20.0 release for macOS:

```shell
curl -L https://github.com/vmware/govmomi/releases/download/v0.20.0/govc_darwin_amd64.gz | gunzip > /usr/local/bin/govc
chmod +x /usr/local/bin/govc
```
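If your desktop is not a Mac, the same release also publishes builds for other platforms. This sketch assumes the `govc_<os>_<arch>.gz` asset naming used by the v0.20.0 URL above (later govmomi releases switched to tarballs, so check the releases page for newer versions); `govc_url` is a hypothetical helper name.

```shell
# Hypothetical helper: build a govc download URL, assuming the
# govc_<os>_<arch>.gz asset naming used by the v0.20.0 release.
# Arguments (all optional): version, os, architecture; defaults come from uname.
govc_url() {
    version=${1:-v0.20.0}
    os=${2:-$(uname -s | tr '[:upper:]' '[:lower:]')}   # e.g. darwin or linux
    arch=${3:-$(uname -m)}
    # Release assets use Go-style architecture names
    case "$arch" in
        x86_64) arch=amd64 ;;
    esac
    echo "https://github.com/vmware/govmomi/releases/download/${version}/govc_${os}_${arch}.gz"
}

# Usage (writing to /usr/local/bin may require sudo):
#   curl -L "$(govc_url)" | gunzip > /usr/local/bin/govc && chmod +x /usr/local/bin/govc
```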

Step 2 - Deploy the sk8-photon.ova appliance using the vSphere UI. You can either download the OVA to your local desktop and deploy it from there, or simply deploy it directly from the URL: https://s3-us-west-2.amazonaws.com/cnx.vmware/cicd/sk8-photon.ova

I personally recommend importing the OVA into a vSphere Content Library, this way you can easily deploy additional instances which are independent of each other for testing purposes.

During the network configuration, make sure to change the IP Allocation to DHCP.

Step 3 - Next, specify the version of k8s and the number of nodes. In this example, we will use the default, which is the latest version of k8s, but you can follow the GitHub URL if you wish to deploy a specific version. The really slick thing about sk8s is that you can define the number of control plane and worker nodes, as shown in the screenshot below, and sk8s will automatically clone itself and configure the k8s nodes to match the desired outcome. In this example, I will deploy 3 nodes: 2 control plane nodes with a shared worker node.

Step 4 - In the next section, you can define the CPU and memory resources used for the control plane and worker nodes. You will also need to provide your vCenter Server credentials, which will be used within the sk8s VM to perform the actual clone operation.
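If you prefer the command line over the vSphere UI for Steps 2 through 4, govc can perform the same import. This is a rough sketch, assuming the OVA has already been downloaded locally: `govc import.spec` dumps the OVA's deployment options as JSON, and the hypothetical helper below forces the IP allocation policy to `dhcpPolicy`. The sk8s-specific property keys (k8s version, node counts, CPU/memory, vCenter credentials) come from the OVA itself and are not reproduced here, so fill in their values in the generated file before importing.

```shell
# Hypothetical helper: generate a govc import spec for the sk8s OVA with
# DHCP IP allocation (the CLI counterpart of the IP Allocation setting in the UI).
# $1 = path to the OVA, $2 = output JSON file
make_dhcp_spec() {
    ova=$1
    out=$2
    # Dump the OVA's default deployment options; stop if govc fails
    spec=$(govc import.spec "$ova") || return 1
    # Rewrite whatever policy the OVA defaults to as dhcpPolicy
    printf '%s\n' "$spec" |
        sed 's/"IPAllocationPolicy": *"[^"]*"/"IPAllocationPolicy": "dhcpPolicy"/' > "$out"
}

# Usage (edit the PropertyMapping values in sk8-spec.json before importing):
#   make_dhcp_spec sk8-photon.ova sk8-spec.json
#   govc import.ova -options=sk8-spec.json -name=sk8 sk8-photon.ova
```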

Step 5 - Once the sk8s OVA has been deployed, the last step is to power on the VM and watch it do its thing. If everything was configured correctly, you should see additional VMs cloned from the source sk8s appliance. The clones follow the same naming convention as the base sk8s VM, with -c[NN] appended for Control Plane VMs or -w[NN] for Worker VMs, as shown in the screenshot below. The initial VM is also a Control Plane VM, but it is not renamed so that it can still find itself for the clone operation.
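Instead of watching the UI, you can list the clones with govc and sort them by role. This sketch assumes the base VM is named `sk8` (substitute your own name) and that clone names end in exactly `-c` or `-w` followed by digits, as described above; `govc find / -type m -name 'sk8*'` lists matching VMs, and `sk8_roles` is a hypothetical helper.

```shell
# Hypothetical helper: read VM inventory paths (one per line, e.g. from govc find)
# and label each VM according to the sk8s naming convention.
# $1 = name of the base sk8s VM
sk8_roles() {
    base=$1
    while IFS= read -r path; do
        name=${path##*/}   # strip the inventory path, keep the VM name
        case "$name" in
            "$base") echo "$name control-plane (initial VM)" ;;
            "$base"-c[0-9]*) echo "$name control-plane" ;;
            "$base"-w[0-9]*) echo "$name worker" ;;
            *) echo "$name unknown" ;;
        esac
    done
}

# Usage:
#   govc find / -type m -name 'sk8*' | sk8_roles sk8
```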

Note: If cloning does not happen within 30 seconds or so, there may be an issue. To troubleshoot further, log in to the console of the VM (root/changeme) and take a look at /var/log/sk8/vsphere.log, which contains more information about the deployment.
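The 30-second rule of thumb can be scripted from your desktop. This is a minimal sketch, assuming govc is configured and the base VM is named `sk8`; the hypothetical `wait_for_clones` polls `govc find` until at least the expected number of clones appears or the timeout expires. For the 3-node example in Step 3, the initial VM counts as one node, so you would wait for 2 clones.

```shell
# Hypothetical helper: wait until at least $2 clones of base VM $1 exist,
# checking every $4 seconds (default 5) for up to $3 seconds (default 30).
wait_for_clones() {
    base=$1 expected=$2 timeout=${3:-30} interval=${4:-5}
    elapsed=0
    while :; do
        # Count only <base>-cNN / <base>-wNN clones, not the base VM itself
        found=$(govc find / -type m -name "$base-*" | grep -c -E "/$base-[cw][0-9]+$")
        if [ "$found" -ge "$expected" ]; then
            echo "$found clone(s) found"
            return 0
        fi
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "only $found clone(s) after ${timeout}s; check /var/log/sk8/vsphere.log" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
}

# Usage:
#   wait_for_clones sk8 2
```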

As mentioned, the actual clone operation should be fairly quick, but it can take up to several minutes to download the required binaries for setting up the k8s cluster. You can monitor the progress by logging in to the console of the sk8s VM (SSH is disabled by default) and tailing /var/log/sk8/sk8.log to see detailed progress.

Step 6 - Once the cloning operation has completed, the Notes/Annotations for all VMs will automatically be updated with unique instructions on how to access that particular k8s cluster deployment. These take the form of a cURL command, which talks to the sk8 service and returns information about the cluster and how to connect. The command relies on the govc CLI, which is why you need to have it installed locally on your desktop.

Before running the cURL command, we need to define a few govc environment variables so that it can talk to our vCenter Server. To do so, run the following commands, replacing the values with your own:

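```shell
export GOVC_URL=https://192.168.30.200/sdk
export GOVC_USERNAME=*protected email*
# Single quotes keep special characters such as ! from being interpreted by the shell
export GOVC_PASSWORD='VMware1!'
# Skip TLS certificate verification (e.g. for a self-signed vCenter certificate)
export GOVC_INSECURE=True
```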
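Before running the command from the Notes, it can be worth confirming that govc can actually reach vCenter with these values. In this sketch, `govc about` (which prints basic information about the vCenter Server it connects to) serves as the connectivity test; `check_govc_env` is a hypothetical helper name.

```shell
# Hypothetical helper: verify that the GOVC_* variables are set and usable.
check_govc_env() {
    for var in GOVC_URL GOVC_USERNAME GOVC_PASSWORD; do
        # Indirect variable lookup that works in POSIX sh
        eval "val=\${$var:-}"
        if [ -z "$val" ]; then
            echo "$var is not set" >&2
            return 1
        fi
    done
    # govc about fails if the URL or credentials are wrong
    govc about > /dev/null || { echo "govc cannot connect to $GOVC_URL" >&2; return 1; }
    echo "govc can reach $GOVC_URL"
}

# Usage:
#   check_govc_env
```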

In my setup, I have the following in my VM Notes field:

```shell
curl -sSL http://bit.ly/sk8-local | sh -s -- 42361763-43f3-cd0c-f21a-1a3a0ecc9736
```
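The Notes can also be read with govc instead of the UI. This sketch assumes a single Datacenter (so govc resolves the relative path `vm/sk8`), a base VM named `sk8`, and an annotation containing a line of the form shown above; `govc object.collect -s vm/sk8 config.annotation` prints the VM's annotation, and `sk8_cluster_id` is a hypothetical helper that extracts the identifier passed after `--`.

```shell
# Hypothetical helper: extract the sk8 cluster identifier from VM Notes text
# of the form "curl -sSL http://bit.ly/sk8-local | sh -s -- <id>".
sk8_cluster_id() {
    sed -n 's/.*sh -s -- *\([0-9A-Za-z-]*\).*/\1/p' | head -n 1
}

# Usage:
#   id=$(govc object.collect -s vm/sk8 config.annotation | sk8_cluster_id)
#   curl -sSL http://bit.ly/sk8-local | sh -s -- "$id"
```

If your /bin/sh rejects `set -o pipefail` (older dash releases, for example), piping to `bash -s --` instead of `sh -s --` may help.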

Simply run the command, and it will contact all sk8s nodes to run a health check and then generate SSH keys that can be used to access each node if required. If it hangs at "generating cluster access", the nodes are most likely still downloading the binaries. You can refer to the step above to check the progress and then re-run the command. At the very end, you will be provided with an export command to run, which creates multiple aliases for ssh, scp, kubectl and a turn-down script that will delete all the VMs specific to this k8s deployment. The nice thing about this is that you can deploy any number of sk8s instances in any configuration and access them without constantly switching your kubeconfig, which would be required if these aliases did not exist.

After running the export command, instead of using the traditional kubectl command to connect to the k8s cluster, you will use the specific alias noted in the output. In my example, it is kubectl-68f6132.
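The generated aliases wrap tools on your desktop, so kubectl (along with ssh and scp) needs to be installed locally for them to work. A quick check is sketched below; `require_tools` is a hypothetical name, and the tool list is an assumption based on the aliases described above.

```shell
# Hypothetical helper: report any command-line tools missing from PATH.
# Returns 0 only if every named tool is found.
require_tools() {
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" > /dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage:
#   require_tools govc kubectl ssh scp || echo "install the missing tools first"
```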

Step 7 - We can confirm that everything was set up correctly by checking the kube-system namespace and ensuring that the two management pods are running without issues. To do so, run the following command, replacing the kubectl alias with the one from your setup:

```shell
kubectl-68f6132 -n kube-system get pods
```
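To turn this visual check into a pass/fail test, you can inspect the STATUS column. This sketch assumes the alias passes its arguments through to kubectl (as the command above suggests); `--no-headers` is a standard `kubectl get` flag, and `all_pods_running` is a hypothetical helper. Note that completed Job pods (status `Completed`) would be reported as not running.

```shell
# Hypothetical helper: read "kubectl get pods --no-headers" output on stdin
# and fail if there are no pods or any pod's STATUS (3rd column) is not Running.
all_pods_running() {
    awk '
        { total++ }
        $3 != "Running" { bad++; print "not running: " $1 " (" $3 ")" > "/dev/stderr" }
        END { if (total == 0 || bad > 0) exit 1 }
    '
}

# Usage:
#   kubectl-68f6132 -n kube-system get pods --no-headers | all_pods_running
```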

At this point, you have a fully functional k8s cluster to which you can start deploying workloads. Pretty cool, huh!? If you want to try out a sample k8s application, continue to the next step.

Step 8 (Optional) - Run the following commands, which download and deploy a sample Enterprise application built by none other than Microsoft Paint Master Massimo Re Ferre':

```shell
wget https://raw.githubusercontent.com/lamw/vmware-pks-app-demo/master/yelb.yaml
kubectl-68f6132 create namespace yelb
kubectl-68f6132 apply -f yelb.yaml
watch -n 1 kubectl-68f6132 -n yelb get pods
```
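In a script, `watch` can be replaced with `kubectl wait`, which blocks until a condition holds or a timeout expires (available in kubectl 1.11 and later). This sketch assumes the alias is an executable on your PATH that forwards its arguments to kubectl; the 300-second default timeout and the `wait_ready` name are arbitrary, hypothetical choices.

```shell
# Hypothetical helper: block until every pod in a namespace reports Ready.
# $1 = kubectl command or wrapper on PATH, $2 = namespace, $3 = timeout (default 300s)
wait_ready() {
    kctl=$1 ns=$2 timeout=${3:-300s}
    "$kctl" -n "$ns" wait --for=condition=Ready pods --all --timeout="$timeout"
}

# Usage:
#   wait_ready kubectl-68f6132 yelb
```

If it runs before the pods have been created, `kubectl wait` may report that no matching resources were found; re-running it a few seconds later should work.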

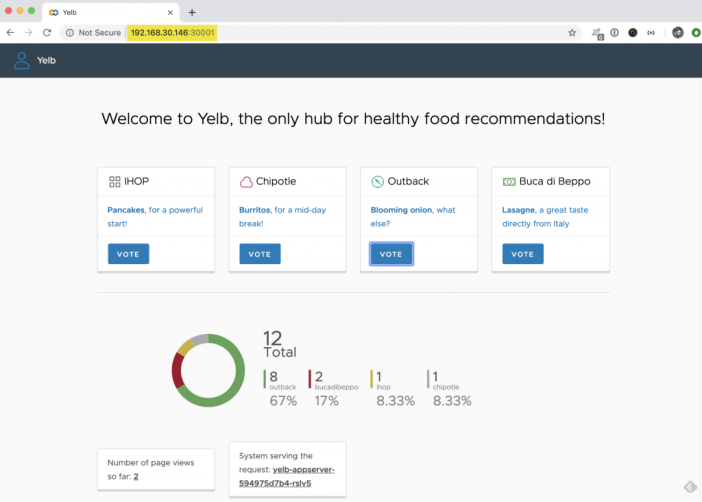

Once all the pods are up and running, we need to find the IP Address of the node running the yelb-ui pod in order to access the application. The command above gives you the pod name, and you can then run the following command to get the specific k8s node, which includes the IP Address, as shown in the screenshot below.

```shell
kubectl-68f6132 -n yelb describe pod yelb-ui-69c7745b49-8q2zb
```
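The node IP and URL can also be derived without reading through the `describe` output. This sketch assumes the yelb-ui pod name starts with `yelb-ui-` and that the UI is exposed on port 30001 as described below; `status.hostIP` is the standard pod field holding the IP of the node the pod runs on, and `yelb_ui_url` is a hypothetical helper.

```shell
# Hypothetical helper: print the yelb UI URL (node IP + port 30001).
# $1 = kubectl command or wrapper on PATH
yelb_ui_url() {
    kctl=$1
    # Pick the first pod whose name starts with yelb-ui-
    pod=$("$kctl" -n yelb get pods --no-headers | awk '$1 ~ /^yelb-ui-/ { print $1; exit }')
    [ -n "$pod" ] || { echo "no yelb-ui pod found" >&2; return 1; }
    # hostIP is the IP of the node running the pod
    ip=$("$kctl" -n yelb get pod "$pod" -o jsonpath='{.status.hostIP}')
    echo "http://$ip:30001"
}

# Usage:
#   yelb_ui_url kubectl-68f6132
```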

Once you have the IP Address, open a browser to that IP on port 30001, and you should now be able to access the application.

If you have any feedback or enhancements regarding sk8s, check out the sk8s GitHub page for more information. Something you may or may not have noticed is that sk8s can also run on VMware Cloud on AWS (VMC). In fact, it is even designed to automatically provision an AWS Elastic Load Balancer, given your AWS credentials, and associate it with your k8s deployment. This makes it very simple to deploy k8s in VMC for development purposes without exposing the underlying VMs to the public internet. I will cover this in a separate article for anyone who might be interested in running k8s on VMC.

If you're already running Linux, you'll be much better served by microk8s, available as a snap.

This still seems like a lot of work... Have you looked at Rancher? It uses RKE, which can deploy a cluster to ESXi automatically.

Not sure what you define as "a lot of work", but simply importing an OVA and then running a command to set some variables to access k8s is pretty darn quick to me ... I've not looked at Rancher, but I'm also not looking to install anything else on top, or else there are a number of solutions in this space.

For some reason, I don't see the kubectl binary created in my environment. The other three commands are there.

Looked at the script and realized I need to install kubectl on my Mac.

I am seeing this issue when the clone completes and tries to power on the vm's:

Property 'Password' must be configured for the VM to power on.

Any thoughts?

kubectl is not in the bin directory... using CentOS 7.6

Ran:

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubectl
```

Then reran: curl -sSL http://bit.ly/sk8-local | sh -s -- ********** identifier and kubectl was there.

[root@centos764bit ba4ceee]# kubectl-ba4ceee -n kube-system get pods

The connection to the server 192.168.1.138:443 was refused - did you specify the right host or port?

https://192.168.1.138:443 turns up nothing...seems the port is not working for some reason.

same..

I do not see /var/log/sk8/vsphere.log file. Clone operation is not starting either.

vCenter 6.7. One Datacenter, one ESXi host.

Any ideas on why i might get the following error after running the curl command against the k8s cluster after deployment?

sh: 24: set: Illegal option -o pipefail

type bash instead of sh

Unfortunately on the OVA import I get "Line 33: Unsupported element 'IpAssignmentSection'" no matter what dns name/IP I put in. This is a great idea if it works but I also hear the consensus to simply use a mac or linux machine and run microk8s.

I really liked the idea of running sk8 on vsphere, so I tried it and it seem to be broken due to this issue: https://github.com/vmware/simple-k8s-test-env/issues/22 (at least since oct 2, 2019)

Any idea how to overcome or bypass this issue? I can only think of somehow changing the root password expiration, repackaging the OVF, and redeploying. Or is there a way to kick off the install process again after it fails?

Let me ping Andrew (author of sk8) to see if it's something he can look into.

The latest response there is that this won't be fixed. Sadly, this means the entire project no longer works. It might be worth editing the article above to note this.

Unable to retrieve manifest or certificate file.

Is there a tar I can download?

forget that last question, been long day. 🙂