Multi-homing the vCenter Server Appliance (VCSA), that is, adding additional virtual network cards (vNICs) to it, has been somewhat of an active topic of discussion lately, at least internally. This is by no means a new topic; in fact, if you search online, you will find several articles on how to configure additional vNICs for older releases of the VCSA. Common use cases for this capability, for the VCSA or any other infrastructure VM for that matter, range from a dedicated backup network and 3rd party monitoring to accessing the management stack through a bastion jump host which resides on the public side of a DMZ environment.

UPDATE (04/20/20) - In vSphere 7, multi-homing of the VCSA is now officially supported. You can attach up to 7 additional network adapters to the VCSA, and they will be preserved across upgrades and backups. These networks MUST be different from the default management network on the VCSA. Lastly, if you intend to use vCenter High Availability (VCHA), you should NOT use eth1, as that is the default interface selected when you enable VCHA.

Disclaimer: Although it is possible to add additional vNICs to the VCSA 6.x, as far as I have been told, this is currently not officially supported by VMware. Please use at your own risk.

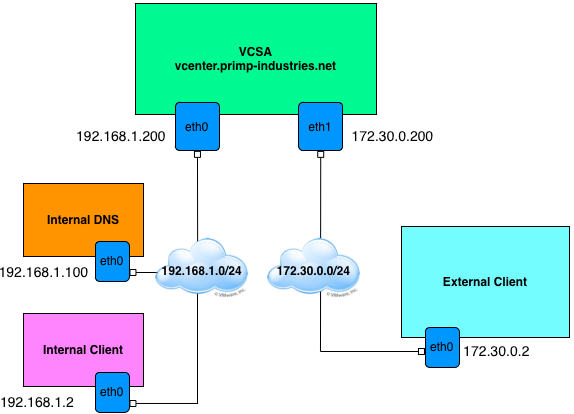

If you are considering multi-homing your VCSA 6.x (this also applies to vCenter Server 6.x for Windows), there are a few things to be aware of that carry over from earlier versions of vSphere. Before jumping into the details, let's take a look at an example deployment of the VCSA which has two vNICs, each connected to a different network. The first network, 192.168.1.0/24, which we will refer to as Network A, is connected to the first interface (eth0, 192.168.1.200) of the VCSA. The VCSA's primary network identifier (PNID) is its FQDN, vcenter.primp-industries.net. A DNS server and an internal Windows desktop client also reside on Network A. The second network, 172.30.0.0/24, which we will refer to as Network B, is connected to the second interface (eth1, 172.30.0.200) of the VCSA. A second Windows desktop client, referred to below as the external desktop client, resides on Network B.

Accessing the vSphere Web Client:

For the internal desktop client, I can point my browser to either the FQDN (vcenter.primp-industries.net) or the IP Address (192.168.1.200) and connect to the vSphere Web Client without any issues. The issue some of you may have run into in vSphere 6.x is that if you connect to the second IP Address (172.30.0.200) of the VCSA from the external desktop client, a redirect occurs when the vSphere Web Client loads, and you are taken to the SSO endpoint, which fails to resolve as seen in the screenshot below.

In my example, I have an Embedded VCSA, but if you had an External Platform Services Controller (PSC), the SSO URL would be that of your PSC. This happens because the PSC binds to the PNID, which can be either an FQDN or an IP Address. To work around this limitation, you will need to either manually add a hosts entry on the external desktop client mapping the second IP Address to the FQDN of the VCSA, or configure the DNS server used by that client to resolve the FQDN to the second IP Address. In my lab, I decided to just update the hosts file on my Windows client (C:\Windows\System32\drivers\etc\hosts) with the following entry:

172.30.0.200 vcenter.primp-industries.net
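The effect of that hosts entry can be sketched in a few lines of Python: by default, a Windows client checks its hosts file before querying DNS, so the PNID FQDN used in the SSO redirect now resolves to the Network B address. The `lookup_in_hosts` helper below is hypothetical and uses simplified parsing; it only illustrates the mapping and is not how the Windows resolver is implemented.

```python
from typing import Optional


def lookup_in_hosts(hosts_text: str, name: str) -> Optional[str]:
    """Hypothetical helper: return the first IP mapped to `name` in hosts-file text."""
    wanted = name.lower()
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        fields = line.split()
        ip, names = fields[0], fields[1:]
        # Host names are compared case-insensitively.
        if wanted in (n.lower() for n in names):
            return ip
    return None


# Hosts file on the external desktop client (Network B)
hosts_on_network_b = """
# Windows desktop client on Network B
172.30.0.200 vcenter.primp-industries.net
"""

print(lookup_in_hosts(hosts_on_network_b, "vcenter.primp-industries.net"))  # -> 172.30.0.200
```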

Once the change has been made, simply refresh the browser, and as you can see from the screenshot below, I am now logged into the vSphere Web Client using the secondary IP Address of the VCSA. This is another reason to always use an FQDN rather than an IP Address for the PNID, which I discuss in more detail in the next section.

Note: If you only need to access vCenter Server through the API or the vSphere C# Client, you will not need this tweak, as neither of those interfaces requires going through SSO. If you plan to use the vCloud Suite SDKs, which do require SSO, then the tweak mentioned above is still required.

FQDN vs. IP Address for PNID:

As you can see from the previous issue, had we selected an IP Address instead of the FQDN for the PNID, we would not have been able to access the vSphere Web Client even with our tweak. The reason is that the PNID would then be tied to the first IP Address (192.168.1.200), which the external desktop client may not be able to reach, and a hosts entry only maps names to addresses, so it cannot redirect one IP Address to another. In addition, if you chose an IP Address instead of an FQDN, you would run into another problem with the default self-signed SSL certificates, which are bound to either the FQDN or the IP Address. Unless you plan to use your own certificates, to which you could add additional entries, make sure to always use an FQDN.
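The certificate problem can be illustrated with a simplified Python check of whether the host in the browser URL appears in the certificate's Subject Alternative Names (SANs). This sketch assumes, as described above, that the default self-signed certificate carries only the PNID; the `cert_covers` helper is hypothetical and ignores wildcards and other rules that real TLS clients apply.

```python
import ipaddress
from typing import Iterable


def cert_covers(host: str, san_dns: Iterable[str] = (), san_ips: Iterable[str] = ()) -> bool:
    """Hypothetical, simplified check: is the URL's host listed in the cert's SANs?"""
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal, so compare DNS names case-insensitively (no wildcards).
        return host.lower() in {name.lower() for name in san_dns}
    # IP literal: it must match an IP Address SAN exactly.
    return any(addr == ipaddress.ip_address(ip) for ip in san_ips)


# PNID = FQDN: the client browses to the FQDN (resolved to 172.30.0.200 via the
# hosts entry), and the certificate lists that FQDN.
print(cert_covers("vcenter.primp-industries.net",
                  san_dns=["vcenter.primp-industries.net"]))  # True

# PNID = IP Address: the certificate lists only 192.168.1.200, so browsing to
# the secondary address fails the check.
print(cert_covers("172.30.0.200", san_ips=["192.168.1.200"]))  # False
```

Note that with an FQDN-based PNID the check passes regardless of which IP Address the name resolves to, which is exactly what makes the hosts/DNS workaround possible.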

Same network for both vNICs:

Make sure that you are using two completely different networks (e.g. different VLANs and different gateways); otherwise, you will run into problems with Linux IP reverse path filtering. If you really need to use the same network on both vNICs, then you will need to disable IP reverse path filtering. This is not a new issue and applies to all modern OSes, as you can only have a single default gateway. If you do need to specify a second gateway for the additional vNIC, you can either use a static route or create a secondary routing table.
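To see why two vNICs on the same network trip up reverse path filtering, here is a toy Python model of strict mode: a packet arriving on an interface is accepted only if the best route back to its source leaves through that same interface. On Linux this behavior is controlled by the `net.ipv4.conf.<interface>.rp_filter` sysctl (0 = off, 1 = strict, 2 = loose), and the kernel uses the higher of the `all` and per-interface values, so disabling it requires both to be 0. The route tables, the gateway addresses 192.168.1.1 and 172.30.0.1, and the remote 10.0.0.0/24 network are hypothetical, and the real kernel lookup (metrics, policy routing, multipath) is more involved than this longest-prefix match.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Route:
    prefix: str                    # destination network, e.g. "172.30.0.0/24"
    iface: str                     # egress interface, e.g. "eth1"
    gateway: Optional[str] = None  # next hop; None means directly connected


def best_route(routes: List[Route], dst: str) -> Route:
    """Longest-prefix match; on a tie, the route listed first wins (a simplification)."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in routes if addr in ipaddress.ip_network(r.prefix)]
    # max() returns the first maximal element, so earlier routes win ties.
    # It raises ValueError if nothing matches; every table below has a default route.
    return max(matches, key=lambda r: ipaddress.ip_network(r.prefix).prefixlen)


def strict_rp_filter_accepts(routes: List[Route], src: str, in_iface: str) -> bool:
    """Strict check: accept only if the reply to `src` would leave via `in_iface`."""
    return best_route(routes, src).iface == in_iface


# Two different networks, as recommended: Network A on eth0, Network B on eth1.
routes_ok = [
    Route("0.0.0.0/0", "eth0", "192.168.1.1"),  # single default gateway (hypothetical)
    Route("192.168.1.0/24", "eth0"),
    Route("172.30.0.0/24", "eth1"),
]

# Both vNICs on the same network: two equal-length routes, only one can win.
routes_same = [
    Route("0.0.0.0/0", "eth0", "192.168.1.1"),
    Route("192.168.1.0/24", "eth0"),
    Route("192.168.1.0/24", "eth1"),
]

# Static route: send a remote network (hypothetical 10.0.0.0/24) via a
# gateway on Network B (hypothetical 172.30.0.1) instead of the default gateway.
routes_static = routes_ok + [Route("10.0.0.0/24", "eth1", "172.30.0.1")]

print(strict_rp_filter_accepts(routes_ok, "172.30.0.50", "eth1"))     # True
print(strict_rp_filter_accepts(routes_same, "192.168.1.50", "eth1"))  # False: reply uses eth0
print(strict_rp_filter_accepts(routes_ok, "10.0.0.5", "eth1"))        # False: reply uses default gw
print(strict_rp_filter_accepts(routes_static, "10.0.0.5", "eth1"))    # True
```

The third case is the single-default-gateway problem in miniature: without a static route (or a secondary routing table), replies to remote clients on the eth1 side head out through eth0.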

Configuring vNIC using new HTML5 VAMI:

Starting with VCSA 6.0 Update 1, there is a new HTML5-based VAMI interface for managing and configuring the VCSA. You can add a new vNIC to the running VCSA using either the vSphere Web Client or the vSphere C# Client connected to the environment that manages the VCSA VM. Once that operation has completed, log in to the VAMI UI by opening a browser to https://[VCSA-HOSTNAME]:5480 and select the "Networking" tab on the left-hand side. You should see both vNICs, with the newly added vNIC in an unconfigured state as shown in the screenshot below.

To configure the new vNIC, just click on the "Edit" button and specify either an IPv4 or IPv6 address. Make sure you do not add a gateway UNLESS you plan on changing the system's default gateway; setting one unintentionally can disrupt the VCSA's networking.

In addition to the VAMI UI, you can also configure additional vNICs from the command-line using the default appliancesh interface by running the following command:

networking.ipv4.set --interface nic1 --mode static --address 172.30.0.200 --prefix 24

Or, if you have switched the default shell to bash, you can run the following VAMI command:

/opt/vmware/share/vami/vami_set_network STATICV4 172.30.0.200 255.255.255.0 default
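Note that the two commands express the same subnet differently: appliancesh takes a prefix length (`--prefix 24`) while `vami_set_network` takes a dotted netmask (`255.255.255.0`). If you script this, a small Python helper can derive one from the other and also guard against reusing the management network, per the advice earlier in this article. The `static_ipv4_commands` helper and `MGMT_NETWORK` constant are hypothetical; the helper only formats the two command strings exactly as shown above and does not run anything on the VCSA.

```python
import ipaddress
from typing import Tuple

# Network A (eth0) from the example deployment
MGMT_NETWORK = ipaddress.ip_network("192.168.1.0/24")


def static_ipv4_commands(nic: str, address: str, prefix: int) -> Tuple[str, str]:
    """Hypothetical helper: build the appliancesh and VAMI commands for one vNIC."""
    iface = ipaddress.IPv4Interface(f"{address}/{prefix}")
    # The additional vNIC must live on a different network than the management vNIC.
    if iface.network.overlaps(MGMT_NETWORK):
        raise ValueError(f"{iface.network} overlaps the management network {MGMT_NETWORK}")
    appliancesh = (f"networking.ipv4.set --interface {nic} --mode static "
                   f"--address {iface.ip} --prefix {prefix}")
    # vami_set_network expects a dotted netmask rather than a prefix length.
    vami = (f"/opt/vmware/share/vami/vami_set_network STATICV4 "
            f"{iface.ip} {iface.network.netmask} default")
    return appliancesh, vami


for cmd in static_ipv4_commands("nic1", "172.30.0.200", 24):
    print(cmd)
```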

For those interested, I used VMware Photon OS and the following Docker container to quickly stand up a DNS server. This might come in handy for anyone looking to try this out in their lab or just for general testing.