One of the announcements at VMworld this week is the upcoming release of vSphere 6.0 Update 1 (GA sometime in Q3 2015). In addition to bug fixes, this release includes several new enhancements. Here are some of the new capabilities specifically for the vCenter Server Appliance (VCSA).

- New Deployment Targets - The VCSA now supports both vCenter Server (brownfield) and ESXi (greenfield) as deployment targets. Using either the Guided UI or the Scripted UI, you can now deploy to an existing vCenter Server, which might serve as a management cluster, for example. Previously, ESXi was the only supported deployment target.

- Convert Embedded VCSA to External PSC - An Embedded VCSA deployment can now be reconfigured or repointed to an External PSC using the new "reconfigure" and "repoint" options of the /bin/cmsso-util utility. This allows customers to get started quickly with the simple Embedded VCSA deployment and later, as they get more comfortable and want to scale out to an External PSC for features like Enhanced Linked Mode, easily do so.

Two of the most frequently asked questions I have seen from customers since the release of VCSA 6.0 are: where did the VMware Appliance Management Interface (VAMI) go, and where did URL-based patching go? These two features did not make it into the VCSA 6.0 release, and today I am pleased to announce that they have returned with some nice enhancements!

- VAMI UI - The VAMI UI is available on the familiar port 5480 at https://[VCSA]:5480 and requires a local OS account, such as root, to log in. The VAMI has been completely rewritten on both the backend and the frontend, which is now an HTML5 interface. All VAMI functionality is available both from the UI and from the appliancesh command-line interface.

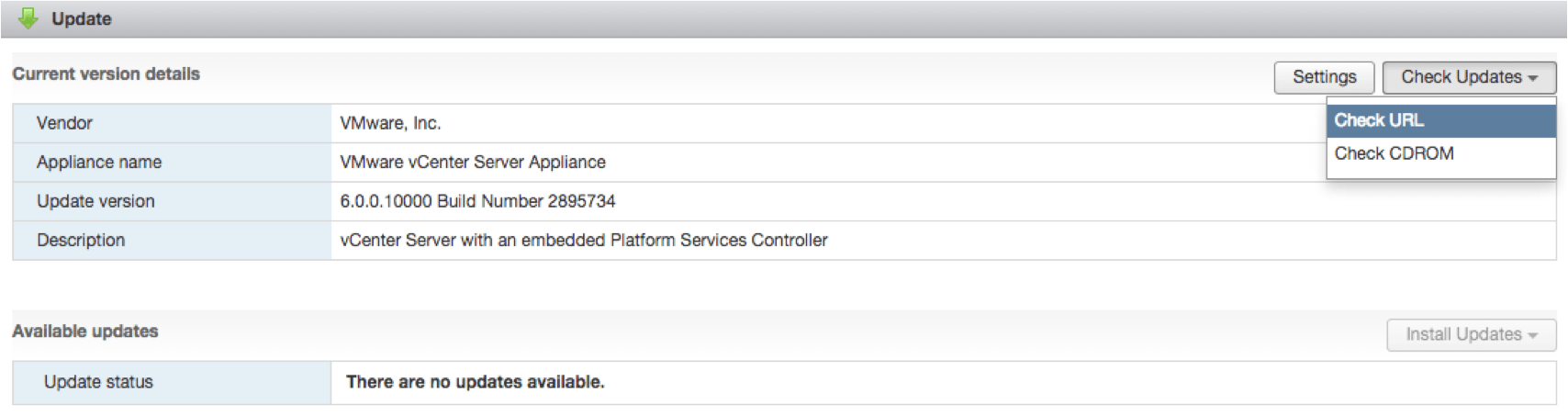

- URL-based patching - URL-based patching is also included in the new VAMI UI. By default, it points to VMware's online repository, but as in previous versions you can also configure it to use an ISO or a custom repository. All patching capabilities are also available from the appliancesh command-line interface.

- PSC UI - In addition to the new VAMI UI, there is also a new Platform Services Controller (PSC) UI, also written in HTML5. The new UI is located at https://[VCSA]/psc and requires an SSO Administrator account to log in. It uses the same backend as the PSC configuration found within the vSphere Web Client. The idea behind this UI is to give customers a way to configure SSO and other PSC-related settings, either for a greenfield setup or when the vSphere Web Client is unavailable, which can come in handy for troubleshooting. Lastly, the new PSC UI lets you replace certificates through a UI, whereas previously this was only possible from the CLI.

- Build-2-Build upgrade support - In prior releases, both "Major" and "U" (Update) releases of the VCSA required deploying a new VCSA and performing a migration-based upgrade. In vSphere 6.0 Update 1, "U" releases (U1, U2, etc.) can now be applied as an in-place upgrade, sometimes referred to as a build-2-build upgrade. There will be a VCSA 6.0 Update 1 ISO that can be mounted to your existing VCSA 6.0 appliance to perform the upgrade, as seen in the screenshot below.

- appliancesh automation - The appliancesh interface in VCSA 6.0 was primarily targeted at interactive usage and did not support any type of automation. Customers asked for a way to call into the various appliancesh commands, and in VCSA 6.0 Update 1 you can now place a series of appliancesh commands in a file and redirect that file into an SSH session. VMware is also looking into providing a proper API for the appliancesh commands; if you have any feedback on this, please leave a comment or reach out to Alan Renouf, who is the PM.

Below are the contents of the vcsa-commands.txt file, which contains the following appliancesh commands to configure and enable NTP on the VCSA:

    ntp.test --servers 0.pool.ntp.org,1.pool.ntp.org
    ntp.server.add --server 0.pool.ntp.org,1.pool.ntp.org
    timesync.set --mode NTP
    ntp.get
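If you would rather generate the command file and start the SSH session from a script, the Python sketch below shows one possible approach. It assumes that root's login shell on the VCSA is appliancesh (so commands redirected over SSH are executed by appliancesh, as described above), that an `ssh` client is on your PATH, and that authentication is already handled. The helper names `build_ntp_commands`, `build_ssh_argv` and `run_appliancesh_file` and the hostname `vcsa.example.com` are hypothetical. The subprocess call is simply the scripted equivalent of `ssh root@[VCSA] < vcsa-commands.txt`.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def build_ntp_commands(servers: list[str]) -> str:
    """Render the appliancesh NTP commands from vcsa-commands.txt, one per line.

    Hypothetical helper; the command text mirrors the example file above.
    """
    server_list = ",".join(servers)
    commands = [
        f"ntp.test --servers {server_list}",
        f"ntp.server.add --server {server_list}",
        "timesync.set --mode NTP",
        "ntp.get",
    ]
    return "\n".join(commands) + "\n"


def build_ssh_argv(vcsa_host: str, user: str = "root") -> list[str]:
    """argv for an SSH session that lands in appliancesh on the VCSA (assumed)."""
    return ["ssh", f"{user}@{vcsa_host}"]


def run_appliancesh_file(vcsa_host: str, command_file: Path,
                         user: str = "root") -> subprocess.CompletedProcess:
    """Scripted equivalent of: ssh root@[VCSA] < vcsa-commands.txt"""
    with command_file.open("rb") as stdin:
        return subprocess.run(
            build_ssh_argv(vcsa_host, user),
            stdin=stdin,          # feed the command file to the remote shell
            capture_output=True,  # keep the appliancesh output for inspection
            check=False,          # let the caller decide how to treat failures
        )


# Example usage (placeholder hostname, not executed here):
#   path = Path("vcsa-commands.txt")
#   path.write_text(build_ntp_commands(["0.pool.ntp.org", "1.pool.ntp.org"]))
#   result = run_appliancesh_file("vcsa.example.com", path)
#   print(result.stdout.decode())
```

Keeping the command list in one function makes it easy to swap in your own NTP servers, while the SSH side stays exactly the same as the manual file redirect.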

Lastly, although this is not specific to the VCSA, I thought it was worth mentioning that you can now access ALL capabilities of vSphere Update Manager (VUM) within the vSphere Web Client. VUM still requires a separate Windows system, but it fully interoperates with both the Windows vCenter Server and the VCSA, and you no longer need to rely on the vSphere C# Client to perform remediation or to create and assign baselines.

As you can see, there are a ton of enhancements in the latest vSphere 6.0 Update 1 release, and if you have not taken vSphere 6.0 for a spin yet, I definitely recommend starting with this release.