I have had a few folks ask about Nested ESXi support on VMware Cloud on AWS (VMC), so let's get that out of the way first. Nested ESXi is NOT supported by VMware on any of our platforms, whether that is an on-premises environment, a cloud environment like VMC, or any 3rd party vendor that may be using VMware software. For those wanting an "official" statement on Nested ESXi support, you can refer to KB 2009916.

UPDATE (02/10/20) - Updated my Automated vSphere Lab Deployment Script to support a "basic" Nested vSphere environment running on VMC.

Was asked if it was possible to setup a “basic” Nested vSphere environment for Automation/API testing running in #VMWonAWS

Just updated my Automated vSphere Deployment Lab Script https://t.co/50tJmcDcH2 to enable support for #VMC 😊 pic.twitter.com/n0SeNIaJm8

- William Lam (@lamw), February 10, 2020

Now, we all know Nested ESXi works and it runs extremely well on vSphere. In fact, vSphere is the best platform for running any Hypervisor in a VM. This is also true for VMC: you can run a Nested ESXi VM in an SDDC, but there are some caveats compared to what you would experience in an on-prem environment. Below are some of the caveats to be aware of if you are considering running Nested ESXi on VMC.

1) Deploying Nested ESXi into VMC SDDC

Luckily, there are no caveats here. You can create a new Nested ESXi VM (following the same procedure you would for an on-prem environment) and then mount the ISO to manually install ESXi if you like. In my opinion, the easiest method to deploy Nested ESXi, whether it is on VMC or on-prem, is using my Nested ESXi Content Library, which also works in VMC. Simply add the Content Library (sync is enabled by default) and then you can deploy a number of pre-configured Nested ESXi VMs without even breaking a sweat!

2) Storage for Nested ESXi

The first caveat is related to the underlying storage that VMC uses, which is VSAN. If you want to run Nested ESXi on top of a physical VSAN-based datastore, you need to enable an ESXi Advanced Setting that "fakes" SCSI Reservations, which is required if you wish to create either a VMFS-based or VSAN Datastore for your Nested ESXi VM. To be clear, this behavior is exactly the same for an on-prem environment using VSAN. The only difference is that on-prem customers control the physical ESXi host and can enable this setting, whereas in VMC it is not enabled.

The implication here is that you would not be able to create a VMFS or VSAN based datastore for your Nested ESXi hosts. The only option at the moment is to set up an NFS Server, which can run as a VM, to provide the Nested ESXi hosts with local and/or shared storage.
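As a rough illustration of the NFS route, the sketch below builds the `esxcli storage nfs add` command that could be run on each Nested ESXi host to mount an export from the NFS VM. The helper function is hypothetical, and the server address, export path and datastore name are placeholders I made up for the example, not values from my SDDC.

```python
import shlex
from typing import List


def nfs_mount_command(nfs_server: str, export_path: str, datastore_name: str) -> List[str]:
    """Build the esxcli argv that mounts an NFS export as a datastore."""
    return [
        "esxcli", "storage", "nfs", "add",
        "--host", nfs_server,             # IP or hostname of the NFS server VM
        "--share", export_path,           # exported directory on that server
        "--volume-name", datastore_name,  # datastore name as seen by ESXi
    ]


# Placeholder values (assumptions for illustration only)
cmd = nfs_mount_command("192.168.1.50", "/srv/nfs/nested", "nfs-nested")
print(shlex.join(cmd))
```

You would run the printed command in an SSH session on each Nested ESXi host, so that every host sees the same shared datastore.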

3) Networking for Nested ESXi

The second caveat is related to the network connectivity for the VMs that you might want to run on top of the Nested ESXi hosts. Network connectivity for the Nested ESXi hosts themselves functions as expected, and this is true whether you have an NSX-V or NSX-T based SDDC in VMC. However, for the VMs that run on top of the Nested ESXi hosts to communicate with each other, the underlying physical network (VSS or VDS) must either have both Promiscuous Mode and Forged Transmit enabled OR leverage the new MAC Learning capability, which was introduced in vSphere 6.7 and removes the need for either of those security settings.

In VMC, all network portgroup security settings on the physical ESXi hosts are configured to reject and this cannot be modified by customers. Furthermore, the MAC Learning capability is not available, as the VDS for an NSX-V based SDDC is still at version 6.0. Even if it were using the latest VDS version, customers still would not have the ability to change the portgroup settings. In an NSX-T based SDDC, MAC Learning is not available either, as it is disabled by default and cannot be enabled.

The implication here is that you would be able to deploy VMs on top of your Nested ESXi hosts, but they would only have network connectivity between each other if they are running on the same Nested ESXi host. If they run on different Nested ESXi hosts, they would not be able to communicate with each other. Furthermore, regardless of which Nested ESXi host the VMs run on, they would not have any network connectivity to the external world. Depending on your usage, you may not require any networking for the "inner" VMs. If you do, you might want to consider creating one large Nested ESXi host for testing purposes.
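To make the connectivity rule above concrete, here is a toy model of it in Python. This is only a sketch of the reasoning and not any VMware API. The class, function and flag names are all hypothetical, and the flag simply stands in for "Promiscuous Mode plus Forged Transmit, or MAC Learning, is enabled on the underlying network", which is not the case in VMC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InnerVM:
    name: str
    nested_host: str  # name of the Nested ESXi host this VM runs on


def can_communicate(a: InnerVM, b: InnerVM, underlay_permits_nested_macs: bool = False) -> bool:
    """Two inner VMs can talk if the underlay permits it or they share a Nested ESXi host."""
    if underlay_permits_nested_macs:
        return True
    # Traffic between VMs on the same Nested ESXi host never leaves that host's virtual switch
    return a.nested_host == b.nested_host


def can_reach_external(vm: InnerVM, underlay_permits_nested_macs: bool = False) -> bool:
    """External connectivity always has to cross the underlying network."""
    return underlay_permits_nested_macs


# Hypothetical inventory
vm1 = InnerVM("photon-01", "nested-esxi-1")
vm2 = InnerVM("photon-02", "nested-esxi-1")
vm3 = InnerVM("photon-03", "nested-esxi-2")

print(can_communicate(vm1, vm2))  # same Nested ESXi host
print(can_communicate(vm1, vm3))  # different Nested ESXi hosts
print(can_reach_external(vm1))    # VMC default
```

With the VMC defaults, only the first check comes back true, which is why a single large Nested ESXi host is the practical choice if the inner VMs need to talk to each other.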

4) Running vCenter Server Appliance in VMC

The third caveat is related to the deployment of the vCenter Server Appliance (VCSA) on VMC in case you wish to manage the Nested ESXi hosts from within the SDDC. Once you have it running, there are no issues and it works just like any other vSphere environment. The issue is specifically with the VCSA UI and CLI Installer, which require you to provide an administrator account when deploying the VCSA. In case you are not aware, customers in VMC are not given full permissions to the entire SDDC but rather a subset of privileges through the CloudAdmin user. Since this account does not have the Administrator role, the VCSA UI and CLI Installer cannot be used.

The implication here is that you can still install the VCSA in VMC, but you need to perform some additional steps. The VCSA UI and CLI Installer normally handle both Stage 1 (deployment of the OVA) and Stage 2 (configuration of the VCSA). Instead, you now have to deploy the VCSA OVA to the SDDC yourself, either manually or using Automation, and once the VCSA is powered on, connect to the VAMI UI to finish the configuration.

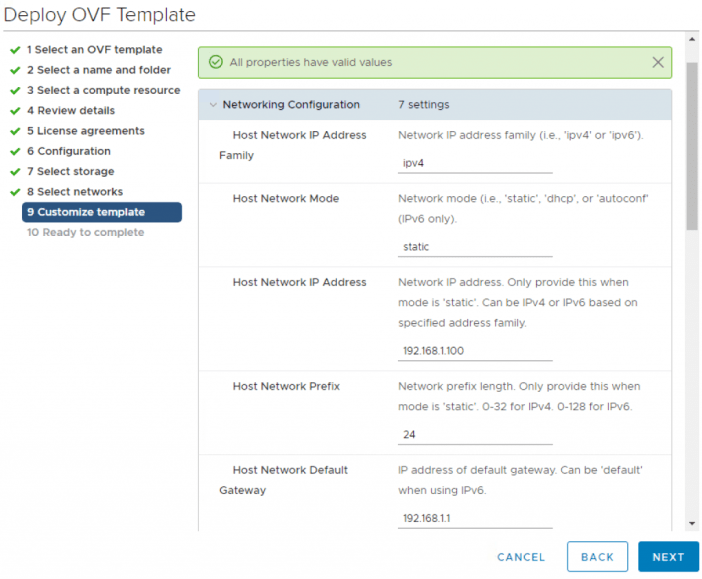

Stage 1 Deployment - The VCSA OVA is located in the vcsa directory when you extract the VCSA ISO. You can then import the VCSA OVA using the vSphere UI within the VMC SDDC. You will be prompted to specify the deployment type (e.g. Embedded, External VC or PSC) and then to provide basic OVF properties, which will initially configure the network and credentials of the VM as shown in the screenshots below. Once the OVA has been imported, you can power on the VM.

Note: It will take a few minutes for the initial configuration to be applied, so please be patient and do not try to log in to the VM or interrupt the process.
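If you would rather automate Stage 1 than click through the vSphere UI, one option is to drive ovftool. The sketch below only assembles the command line, and nearly everything in it is an assumption to verify against your own environment: the OVA file name, the vCenter hostname, the inventory path, datastore and network names, and especially the `guestinfo.cis.appliance.*` OVF property keys, which you should confirm by probing your OVA with `ovftool <path-to-ova>` before relying on them.

```python
import shlex
from typing import Dict, List


def vcsa_ovftool_command(ova_path: str, target: str, vm_name: str, datastore: str,
                         network: str, deployment_option: str,
                         ovf_props: Dict[str, str]) -> List[str]:
    """Assemble an ovftool argv that imports the VCSA OVA (Stage 1 only)."""
    args = [
        "ovftool",
        "--acceptAllEulas",
        "--noSSLVerify",
        f"--name={vm_name}",
        f"--datastore={datastore}",
        f"--network={network}",
        f"--deploymentOption={deployment_option}",
    ]
    # Each OVF property is passed as --prop:<key>=<value>
    for key in sorted(ovf_props):
        args.append(f"--prop:{key}={ovf_props[key]}")
    args += [ova_path, target]
    return args


# All values below are placeholders (assumptions), not a tested configuration
props = {
    "guestinfo.cis.appliance.net.addr.family": "ipv4",
    "guestinfo.cis.appliance.net.mode": "static",
    "guestinfo.cis.appliance.net.addr": "192.168.1.100",
    "guestinfo.cis.appliance.net.pnid": "192.168.1.100",
    "guestinfo.cis.appliance.net.prefix": "24",
    "guestinfo.cis.appliance.net.gateway": "192.168.1.1",
    "guestinfo.cis.appliance.net.dns.servers": "192.168.1.1",
    "guestinfo.cis.appliance.root.passwd": "CHANGE-ME",
}
ova = "vcsa/VMware-vCenter-Server-Appliance.ova"
target = ("vi://cloudadmin%40vmc.local@vcenter.sddc.example.com"
          "/SDDC-Datacenter/host/Cluster-1/Resources/Compute-ResourcePool")

cmd = vcsa_ovftool_command(ova, target, "nested-vcsa", "WorkloadDatastore",
                           "sddc-cgw-network-1", "tiny", props)
print(shlex.join(cmd))
```

The command deliberately leaves out `--powerOn`, so the VM can be reviewed and then powered on by hand, matching the manual flow described above.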

Stage 2 Configuration - Once the VCSA is ready, you should be able to open a browser and connect to the VAMI UI by going to https://[IP-OR-HOSTNAME]:5480, where you should see the screen below. If you do not, it might still be initializing, so give it a few more minutes. Next, we need to configure the VCSA. Click on the "Set up" icon, which will start the wizard you would normally see if you were using the VCSA UI Installer.
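Rather than refreshing the browser, you could poll the VAMI port until it answers. The following is a minimal sketch using only the Python standard library. The function names, retry count and delay are arbitrary choices of mine, and "the port returned some HTTP response" is only a rough signal that the appliance has finished initializing, not a guarantee.

```python
import http.client
import ssl
import time
import urllib.error
import urllib.request
from typing import Callable


def vami_url(host: str) -> str:
    """VAMI listens on HTTPS port 5480 of the appliance."""
    return f"https://{host}:5480"


def vami_responds(url: str, timeout: float = 5.0) -> bool:
    """Return True if anything answers HTTP(S) at the given URL."""
    # A freshly deployed VCSA uses a self-signed certificate, so verification is skipped
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=ctx):
            return True
    except urllib.error.HTTPError:
        return True  # an HTTP error status still means the service is answering
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False


def wait_for_vami(host: str, probe: Callable[[str], bool] = vami_responds,
                  attempts: int = 30, delay: float = 20.0,
                  sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll the VAMI URL until it responds or the attempts run out."""
    url = vami_url(host)
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False


# Example call (not executed here): wait_for_vami("192.168.1.100")
```

The `probe` and `sleep` parameters exist so the loop can be exercised without a real appliance or real waiting.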

One thing to be aware of is that if you did not set up a DNS entry for the IP Address used from the Logical Network (NSX-V or NSX-T), then you need to use the same IP Address for the "System Name" property, which represents the FQDN Hostname of the VCSA. In my example, I am simply using the IP Address and I have chosen the value of 192.168.1.100.
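The "System Name" decision can be expressed as a small check: use the FQDN only if it actually resolves to the appliance's IP Address, otherwise fall back to the IP itself. The helper names below are hypothetical, the lookup is IPv4 only, and the hostname in the comment is made up.

```python
import ipaddress
import socket
from typing import Callable, Optional


def resolves_to(fqdn: str, ip: str) -> bool:
    """Return True if a forward DNS lookup of fqdn includes ip (IPv4 only)."""
    try:
        _, _, addresses = socket.gethostbyname_ex(fqdn)
    except OSError:
        return False
    return ip in addresses


def choose_system_name(ip: str, fqdn: Optional[str] = None,
                       resolver: Callable[[str, str], bool] = resolves_to) -> str:
    """Pick the value for the VCSA "System Name" field."""
    ipaddress.ip_address(ip)  # raises ValueError if the address is malformed
    if fqdn and resolver(fqdn, ip):
        return fqdn
    # No usable DNS entry: the System Name must be the same IP Address
    return ip


print(choose_system_name("192.168.1.100"))
# With DNS in place you would pass the name too, e.g.
# choose_system_name("192.168.1.100", "vcsa.lab.example.com")
```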

After you have filled out the wizard, you can click Finish to begin the final configuration. If everything was successful, you should see a success screen and you can now log in to the vSphere UI by connecting to either the IP Address or Hostname of your VCSA.

As you can see, you can definitely run a decent Nested ESXi environment within VMC and, depending on your use case, you may only need the vSphere layer (ESXi and vCenter Server). For example, if you want to build some vSphere Automation using the vSphere API and you simply need a test environment, then you probably do not care about running workloads. You might also want to deploy other VMware products within VMC for integration testing, just like you would in an on-prem environment. This should work as long as you do not require administrative privileges to the VMC SDDC, but YMMV as this is not something I have tested. For folks wanting to run Nested ESXi on VMC, I am curious: what are some of your reasons for wanting this?

Below is one of my NSX-V based SDDCs, where I have set up a really basic Nested ESXi environment that contains three Nested ESXi 6.7 hosts managed by a VCSA 6.7, also running within VMC. The Nested ESXi hosts are connected to an NFS datastore provided by a basic Ubuntu Linux VM that I have running in VMC and, as you can see, I have a couple of PhotonOS VMs deployed (running on the same Nested ESXi host).

Hi William, thank you for the blog. You stated that Nested ESXi on VMC can be installed manually via ISO. When I try the install, it fails to format the install partition, presumably due to "fake" SCSI reservations not being enabled on VMC ESXi hosts. Is there a workaround?

As mentioned in the blog post, the workaround is to use an NFS-based solution.

Is there any *technical* reason why promiscuous mode cannot be enabled on the required portgroups so that virtual machines running on top of the nested ESXi hosts have a way to communicate with the outside world?

Hi JD,

1) You wouldn't want the traditional Promiscuous Mode to be enabled due to performance impact, please see https://www.williamlam.com/2014/08/new-vmware-fling-to-improve-networkcpu-performance-when-using-promiscuous-mode-for-nested-esxi.html for more details

2) The impact of Prom. Mode has been solved with the MAC Learning capability that was introduced in vSphere 6.7 for traditional Distributed Virtual Switch, please see https://www.williamlam.com/2018/04/native-mac-learning-in-vsphere-6-7-removes-the-need-for-promiscuous-mode-for-nested-esxi.html for more details

3) In VMC, NSX-T is used, so the switch is not a VDS but rather the N-VDS. It does have the MAC Learning capability, but that is configured within NSX-T Manager itself. The issue here is that the feature is only configurable via the old NSX Manager API, whereas VMC uses the new NSX-T Policy API, which currently does not expose that feature. I'm not aware of any plans to expose it, but if that is of interest, I recommend reaching out to your local VMware account team to share the feedback.

Thank you for the quick reply William, much appreciated! Promiscuous mode is a requirement for the solution we are working to implement. We will look to provide this feedback to our Customer Success Manager.