In case you have not heard the news, VMware recently published a new knowledge base article (KB 85685) outlining details for the future removal of SD card/USB as a standalone boot device for ESXi.

📣 New VMware KB has just been published on the Removal of SD card/USB as a standalone boot device option for ESXi https://t.co/ci9xLbQIv5

- William Lam (@lamw) September 16, 2021

If you have not read the KB, please take a few minutes and carefully read the article, especially as you think about future hardware upgrades and purchases.

There has certainly been no shortage of discussions and debates since the publishing of the VMware KB. One topic that I know many of you have been wondering and asking about is the impact on vSphere homelabs. This had already crossed my mind when I first read the KB, and I have been thinking about it more this week: specifically, some of the options that are available to customers right now, and also some of the considerations you may want to account for in future homelab upgrades.

Disclaimer: These are my own personal opinions and do not reflect any official guidance or recommendations from VMware.

Use VMFS instead of vSAN

First off, I am a huge fan of vSAN and it is my de facto storage platform for my own personal VMware homelab. With that said, it is not uncommon to find hardware platforms used for VMware homelabs that only support two embedded storage devices, either two NVMe devices or a single NVMe + SATA device, such as the popular Intel NUC. Although not ideal, you may want to consider reinstalling ESXi onto one of the two storage devices and simply using VMFS instead of vSAN. For shared storage requirements, you could set up a VM that provides either NFS or iSCSI, which would then reside on one of the two VMFS volumes, since ESXi and the OS-Data can be co-located with a VMFS partition as well.

Boot from SAN

A few folks had asked about boot from SAN and whether it would be a viable option. It definitely is, but I initially did not include it as an option since most folks may only have a single host and there are some prerequisites and infrastructure that need to be set up. With that said, there are some nifty solutions out there where folks can take advantage of an external storage array that supports booting from an iSCSI LUN.

vSAN backed by USB

Although this has been possible since I first wrote about it back in 2018, I am not currently aware of anyone doing this seriously for their own personal homelab. The interesting thing to note here is that USB is simply being used as the transport, while the underlying storage device can be quite reliable, such as an M.2 NVMe in an M.2 to USB enclosure, which I have outlined in the blog post. This method is also useful for anyone interested in running vSAN with ESXi-Arm using a Raspberry Pi.

Add additional embedded storage devices

This is much easier said than done, especially if your existing investment was already limited by default, but there are certainly some options. For example, the Supermicro E200-8D and E300-9D, which are two popular platforms amongst homelabbers, can be expanded with a PCIe add-on card (AOC). For the E200-8D, you can use this AOC to give yourself an additional M.2 NVMe slot, and for the E300-9D, you can use this AOC to give yourself two additional M.2 NVMe slots. In addition, Supermicro kits also support a SATADOM device, which is a small flash memory device that can then be used for ESXi and its OS-Data.

In fact, this is what I use for my personal homelab, which is comprised of a single E200-8D system. With the use of the SATADOM, you can then use the single embedded M.2 along with the AOC M.2 to construct your vSAN datastore. Depending on your homelab kit, you may be able to use a full- or half-height PCIe slot to give you additional storage. For the classic Intel NUC (4x4), you are definitely constrained further by space but there are still some options! Read further for more details.

Platforms with more than two storage devices

If you are looking to purchase or upgrade your homelab hardware, you may just want to look at platforms that support more than two embedded storage devices. For example, over the past couple of years, Intel has started to introduce more modular form factors in addition to their classic 4x4 design with the release of both the Intel NUC 9 and the recent Intel NUC 11. The NUC 9 can support up to 3 x M.2 NVMe and the NUC 11 can support up to 4 x M.2 NVMe.

In addition to just having more M.2 slots, both the NUC 9 and NUC 11 also include two additional PCIe slots, which can be used to add even more storage like this 6 x M.2 to PCIe board! You can certainly get quite creative with all this additional PCIe capacity, and it is not limited to just storage: you can also add more networking and/or graphics.

Centralized Storage Node/Cluster

With the potential amount of storage that can be packed into a single NUC 9/NUC 11, or any other platform for that matter, folks can also leverage the HCI Mesh with vSAN feature or simply set up iSCSI/NFS and provide centralized storage from a single host while maintaining their existing deployment. This would allow you to migrate your existing workloads and then re-provision the local storage for reinstalling ESXi with the OS-Data.

Storage using Thunderbolt

I know many of you are using the classic Intel NUC (4x4) for your homelab and one concern is that you would no longer be able to use vSAN since it only has space for two embedded storage devices (NVMe + SATA). This would mean that one of the storage devices must now be used for the ESXi installation including the OS-Data, which prevents the use of vSAN as it requires at least two storage devices.

However, all hope is not lost. Starting with the Intel NUC 8, a Thunderbolt 3 port has been included and can be used to add additional NVMe storage, since Thunderbolt is simply an extension of the PCIe bus. Newer Intel NUC models like the recent Intel NUC 11 also support dual Thunderbolt ports including the latest Thunderbolt 4 specification.

In fact, I wrote an article back in 2019 on some of the different Thunderbolt enclosures that can be used with ESXi to add additional M.2 NVMe devices. As of writing this blog post, the cheapest Thunderbolt 3 single M.2 enclosure is still the Envoy Express from OWC for $79 USD. If you have an Intel NUC Gen 8 or newer, this is probably one of the easiest and cheapest ways to leverage your existing hardware investments with minimal changes to your current setup and configuration. Best of all, you can still use vSAN!

Note: I heard from a few folks who mentioned they were already using the single Thunderbolt port for vSAN networking (10GbE) and asked whether they would be out of luck. The answer is no. The nice thing about Thunderbolt is that it can also be chained and, although not cheap, a Thunderbolt 3/4 hub would allow you to connect multiple Thunderbolt devices behind a single port, all of which can then be seen by ESXi.
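The device counting behind this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `can_run_vsan`, its parameters, and the example device counts are hypothetical, and the rule it encodes is simply the one described above (one device reserved for ESXi and the OS-Data, at least two devices left over for vSAN). It is not official sizing guidance.

```python
def can_run_vsan(embedded_devices: int, extra_devices: int = 0) -> bool:
    """Return True if enough devices remain for vSAN after reserving one for ESXi.

    Assumptions (illustrative only):
      - one device is dedicated to the ESXi installation and OS-Data
      - vSAN needs at least two storage devices
      - extra_devices counts add-ons such as a Thunderbolt NVMe enclosure
    """
    if embedded_devices < 0 or extra_devices < 0:
        raise ValueError("device counts must be non-negative")
    boot_devices = 1   # ESXi + OS-Data
    vsan_minimum = 2   # minimum devices vSAN requires
    remaining = embedded_devices + extra_devices - boot_devices
    return remaining >= vsan_minimum


# Classic Intel NUC (4x4): NVMe + SATA only
print(can_run_vsan(2))                    # False
# Same NUC with one Thunderbolt NVMe enclosure added
print(can_run_vsan(2, extra_devices=1))   # True
```

The same check returns `True` for any platform with three or more embedded devices, which is why the larger form factors mentioned earlier are not affected in the same way.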

NVMe Namespaces

The NVMe specification has an interesting feature called NVMe Namespaces that allows you to take a single NVMe device and logically break it up into individual devices, which can be consumed by an operating system like ESXi. NVMe Namespaces are already supported with vSAN, and back in 2019, Micron demonstrated this feature by splitting a single 15TB NVMe into 24 separate NVMe namespaces.

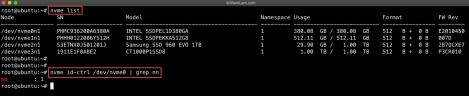

Although this technology sounds like it could potentially solve the challenge of platforms with only one or two NVMe devices, the biggest problem is that almost all consumer NVMe devices do NOT support NVMe Namespaces, especially in the M.2 form factor. NVMe Namespaces are usually found in higher-end NVMe devices (U.2 form factor). In fact, I booted up an Ubuntu Live USB and used the nvme-cli tool to check all the NVMe devices that I own (Intel, Samsung & Crucial) and, as expected, they all support just a single NVMe Namespace, which you can identify by looking at the "nn" attribute as shown in the screenshot.

Additionally, ESXi today has no way of managing NVMe Namespaces (create or delete). You can only view and consume them, so additional work would need to be done to integrate this natively within the ESXi installation experience.
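For anyone who wants to repeat the nvme-cli check across several drives, here is a minimal Python sketch. It assumes the human-readable output of `nvme id-ctrl` prints one `name : value` pair per line (for example a line starting with `nn`), which may vary between nvme-cli versions. The function names are hypothetical. Beyond the "nn" attribute, it also reads the "oacs" field, where bit 3 is defined by the NVMe specification as support for the namespace management commands.

```python
import re
import subprocess


def parse_id_ctrl(text: str) -> dict:
    """Extract the 'nn' and 'oacs' fields from human-readable id-ctrl output.

    Assumes lines look like 'nn        : 1' (name, colon, value).
    """
    fields = {}
    for line in text.splitlines():
        match = re.match(r"^\s*(nn|oacs)\s*:\s*(\S+)", line)
        if match:
            # base 0 accepts both decimal ("1") and hex ("0x17") values
            fields[match.group(1)] = int(match.group(2), 0)
    return fields


def supports_multiple_namespaces(fields: dict) -> bool:
    """True when the controller reports room for more than one namespace."""
    return fields.get("nn", 0) > 1


def supports_namespace_management(fields: dict) -> bool:
    """True when OACS bit 3 (namespace management) is set."""
    return bool(fields.get("oacs", 0) & 0x8)


def read_id_ctrl(device: str) -> dict:
    """Run 'nvme id-ctrl <device>'; needs nvme-cli installed and usually root."""
    result = subprocess.run(
        ["nvme", "id-ctrl", device],
        capture_output=True, text=True, check=True,
    )
    return parse_id_ctrl(result.stdout)
```

On a Linux live environment this could be called as `supports_multiple_namespaces(read_id_ctrl("/dev/nvme0"))`, where `/dev/nvme0` is a placeholder for your own device path.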

In the near term, I suspect only a handful of folks may get the benefits of NVMe Namespaces within their homelab and if you do, you probably have a platform that is not constrained to just two NVMe devices. Since the nvme-cli is open source and there are vendor "plugins", it is possible that one of the mentioned vendors could add support for NVMe Namespaces to some of their consumer grade NVMe devices, but it probably will not affect existing NVMe devices you already own. For those interested in learning more about NVMe Namespaces, I found this recent article pretty useful.

Funny. If you recently purchased a fully supported server with an SD card as boot device you're stuck with vSphere 7 for the remainder of the hardware's lifecycle. Unless you go to the expense of adding/replacing storage for the OSDATA which not only carries a pricetag in hardware but also man hours, possibly downtime and what else.

VMware should have made this statement well before the release of vSphere 7, not when they encountered problems caused by shoddy quality control a few updates after releasing a major version.

Homelab is personal stuff, and while a free version exists, enterprise users mostly don't use USB or SD. vSAN is quite the reason for existence. But SD and USB do still work and will work fine, as these are made to test systems, not for production based on a free license.

I don't know what world you're living in but a lot of enterprise users boot from SD. It's reliable, cheap in hardware and cheap in power consumption. Well, it was reliable until VMware suddenly started with that OSDATA crap where I still fail to see the advantage.

Anyway, I don't mind that VMware made this change. What's mindboggling is that they're now royally buggering their customers who purchased a supported configuration which suddenly will become impossible to upgrade to the next major version of ESXi.

You announce something like this well in advance, not like VMware did here.

Never heard of hardware lifecycles perhaps? Most customers I worked for expect to use servers for at least 5 years and yes, that includes upgrades to at least one major version of the OS if not two. Feels like a cheap Android phone if you can't do that.

For the record, I've known two (2) SD cards to go bad in over 12 years in several thousand servers. One of those SD cards was fried along with the mainboard when the server got hit by a power surge.

TLDR: VMware changed something, they didn't test it, then panicked when they saw the new stuff didn't work, invented some silly explanation no one really believes and as a result customers are faced with costs and operational issues. Very Well Done.

Tom is correct. 5.x and 6.x have no issues.

I've been screwed enough times in the past by failed USB drives that we stopped buying that way years ago. And for my homelab I've been using SSDs as the boot device. I have a SAN for iSCSI so using up a SATA port doesn't cost me anything.

By the way, the most recent updates for ESXi 7 still don't fix the USB boot drive issue. On my NUCs - hardly enterprise stuff of course - I still get the 'Lost connectivity...' errors.

Hi William, I am looking to create a new Homelab as my Dell T20s are no longer cutting it. In your article, I was really interested in your own homelab solution to have a homelab on a single server, but also use SATADOM to create a vSAN datastore:

"E200-8D system. With the use of the SATADOM, you can then use the single embedded M.2 along with the AOC M.2 to construct your vSAN datastore. Depending on your homelab kit, you may be able to add a full / half height PCIe slots to give you additional storage. For the classic Intel NUC (4x4), "

Would it be possible for you to describe how this is done/configured in a little more detail? Maybe you have blogged on this before - but I can't find it.

Cheers 🙂

https://williamlam.com/2018/11/supermicro-e300-9d-sys-e300-9d-8cn8tp-is-a-nice-esxi-vsan-kit.html

@wodge - scroll down to the storage section. He mentions the addon card which also works for the E200-8D. I can confirm as I use that setup.

Many thanks @forbsy - appreciate your reply and pointing me to the article.

In my E200-8D I actually purchased a SATADOM for ESXi but returned it and grabbed a 800GB SATA SSD instead. That along with an onboard NVME plus the addon NVME gives me 3 great storage devices.

So.. what are all of the "ARM" (Raspberry Pi) users to do?

Only the CM4 can use NVME that is not passed thru the USB.

And it figures, just as I had taken the time to open and modify three USB 3x hubs to use the USB +5 as an enable pin for external 12v to 5v regulators with 5A / hub.

This allows for an external powered hub that does not feed +5 back down the USB and prevent the pi from booting. There goes a bunch of time !!!

I configured the three Node (Expands to 32 Nodes) system expressly as a low cost learning tool for students to learn VMware, VSan Etc..

Is VMware likely to support an M.2 SSD in a USB enclosure as a boot device going forward? I have micro VSAN nodes that have 2 SSDs internally for VSAN and no other option for a boot device.

Unlikely and mainly because there's no standards and consistent way of actually detecting the underlying storage medium

For me I found another solution. I use spare m2 sata SSDs with USB adapter. If you have a lot of space and a free internal USB port like I have in PowerEdge Servers it would be a great solution.

https://www.amazon.de/dp/B07T5D6J81/ref=cm_sw_r_cp_api_glt_i_ZVZHAR1Z939KC2GX9T0H

After reading this article I decided to buy an Envoy Express for my Nuc8i7beh. Once it arrived I tried it out immediately with a clean install of ESXi 8.0, but no luck. The NVMe drive is not being detected :(. I tried another NVMe (you never know) that was recognized when using it in the mobo slot, but also no luck. Are there any additional drivers needed? (And yes, the BIOS has Thunderbolt enabled 😉. One thing left to try is a BIOS update... working on that one.)