Several months back I built an ESXi Virtual Appliance that allows anyone to quickly stand up a fully functional Nested ESXi VM, including guest customization such as networking, NTP, syslog, passwords, etc. The virtual appliance was initially built for my own personal use, as I found myself constantly rebuilding my lab environment for evaluating and breaking new VMware software. I figured if this was useful for me, it could probably benefit others at VMware, so I posted the details internally on our Socialcast forum. Since then, I have received numerous stories on how helpful the ESXi Virtual Appliance has been to both our Field and Engineering teams for setting up demos, POCs, evaluations, etc.

What is the VMware Client Integration Plugin (CIP)?

If you are a consumer of the vSphere Web Client, you might have seen something called the VMware Client Integration Plugin (CIP), and you may have even downloaded it from the bottom of the vSphere Web Client page and installed it on your desktop.

However, have you ever wondered what CIP is actually used for? I know I have. Even though I had a general idea of what CIP provides, I have always been curious about the technical details. Recently there have been a few inquiries internally, so I figured I might as well do some research to see what I could find out.

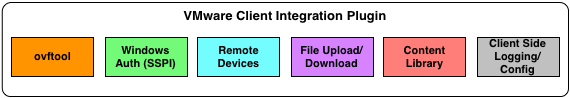

The VMware CIP is actually a collection of different tools that are bundled together into a single installer that is available for either Microsoft Windows or Apple Mac OS X (Linux is being worked on). These tools provide a set of capabilities that are enabled when using the vSphere Web Client and below is a diagram of the different components included in CIP.

- ovftool - Standalone CLI utility used to manage import/export of OVF and OVA images

- Windows Authentication - Allows the use of SSPI when logging in from the vSphere Web Client

- Remote Devices - Connecting client side devices such as a CD-ROM, Floppy, USB, etc. to VM

- File Upload/Download - Datastore file transfer

- Content Library - Operations related to the Content Library feature such as import and export

- Client Side Logging/Config - Allows for writing non-flash logs + vSphere Web Client flash and logging settings

In addition to the capabilities shown above, CIP is also used to assist with basic input validation when deploying the vCenter Server Appliance using the new guided UI installer.

Internally, CIP is referred to as the Client Support Daemon, or CSD for short. Prior to vSphere 6.0 Update 1, CIP ran as a browser plugin relying on the Netscape Plugin Application Programming Interface (NPAPI). In case you had not heard, Google Chrome and other popular browsers have all recently removed support for NPAPI-based plugins in favor of better security and speed. Due to this change, CIP had to be rewritten to no longer rely on this interface, and starting with vSphere 5.5 Update 3a and vSphere 6.0 Update 1, the version of CIP that is installed uses this new implementation. CIP is launched today via a protocol handler, which is a fancy term for a web browser capability that runs a specific program when a particular type of link is opened.

One observation that some customers, including myself, have made when installing CIP is that an SSL Certificate is generated during the installation process. To provide the CIP services to the vSphere Web Client, a secure connection must be made to the vSphere Web Client pages. To satisfy this requirement, a self-signed SSL Certificate is used. Instead of pre-packaging an already generated certificate, one is dynamically created at install time so that no third party has access to the private key and could use it to access the connection from the outside.

The longer term plan is to move as much of the CIP functionality onto the server side as possible, although not everything will be able to move. Remote device support, for example, has already been moved to the Standalone VMRC, which already provides access to the VM Console and is therefore the place where connecting client side devices makes the most sense. Hopefully this gives you a better understanding of what CIP provides and a hint of where it is going in the future.

Here is some additional information that you might find useful when installing and troubleshooting CIP:

CIP Installer Logs:

- Windows -

%ALLUSERSPROFILE%\VMware\CIP\csd\logs

- Mac OS X -

/Applications/VMware Client Integration Plug-in.app/Contents/Library/data/logs

CIP Application Logs:

- Windows -

%USERPROFILE%\AppData\Local\VMware\CIP\csd\logs

- Mac OS X -

$HOME/VMware/CIP/csd/logs

vSphere Web Client / CSD Session Logs:

- Windows -

%USERPROFILE%\VMware\CIP\ui\sessions

- Mac OS X -

$HOME/VMware/CIP/ui/sessions

CIP SSL Certificate Location:

- Windows -

%ALLUSERSPROFILE%\VMware\CIP\csd\ssl

- Mac OS X -

/Applications/VMware Client Integration Plug-in.app/Contents/Library/data/ssl
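When troubleshooting, it can be handy to resolve all of these locations in one go. The snippet below is a minimal Python sketch of that idea, not something that ships with CIP. The dictionary labels and the `expand` and `cip_locations` helpers are names made up for this example, and the paths are simply the ones listed above, using the standard Windows `%USERPROFILE%` variable for the per-user locations.

```python
import os
import re
import sys

# Locations copied from the lists above. The dictionary keys are labels
# chosen for this sketch, not names used by CIP itself.
CIP_PATHS = {
    "windows": {
        "installer_logs": r"%ALLUSERSPROFILE%\VMware\CIP\csd\logs",
        "application_logs": r"%USERPROFILE%\AppData\Local\VMware\CIP\csd\logs",
        "session_logs": r"%USERPROFILE%\VMware\CIP\ui\sessions",
        "ssl_certificate": r"%ALLUSERSPROFILE%\VMware\CIP\csd\ssl",
    },
    "mac": {
        "installer_logs": "/Applications/VMware Client Integration Plug-in.app/Contents/Library/data/logs",
        "application_logs": "$HOME/VMware/CIP/csd/logs",
        "session_logs": "$HOME/VMware/CIP/ui/sessions",
        "ssl_certificate": "/Applications/VMware Client Integration Plug-in.app/Contents/Library/data/ssl",
    },
}

# Matches Windows-style %NAME% and POSIX-style $NAME variables.
_VARIABLE = re.compile(r"%(\w+)%|\$(\w+)")


def expand(template, env):
    """Replace %NAME% and $NAME with values from env; unknown names stay as-is."""
    def substitute(match):
        name = match.group(1) or match.group(2)
        return env.get(name, match.group(0))
    return _VARIABLE.sub(substitute, template)


def cip_locations(platform_name, env):
    """Return the expanded CIP locations for 'windows' or 'mac'."""
    return {label: expand(path, env) for label, path in CIP_PATHS[platform_name].items()}


if __name__ == "__main__":
    if sys.platform.startswith("win"):
        platform_name = "windows"
    elif sys.platform == "darwin":
        platform_name = "mac"
    else:
        platform_name = None
        print("No CIP locations are listed for this platform.")
    if platform_name:
        for label, path in cip_locations(platform_name, os.environ).items():
            print(f"{label}: {path} (exists: {os.path.isdir(path)})")
```

Passing the environment in as a plain mapping keeps the expansion independent of the machine it runs on, so you can also print the Windows locations while sitting on a Mac, or the other way around.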

How to restrict access to both the Standalone VMRC & HTML5 VM Console?

Several weeks back there were a couple of questions from our field asking about locking down access to a Virtual Machine's Console which includes both the new Standalone VMRC (Windows & Mac OS X) which runs on your desktop as well as the new HTML5 VM Console which runs in the browser. Below is a screenshot of the vSphere Web Client showing how to access the two different types of VM Consoles.

To prevent users from accessing either of the VM Consoles (this also applies to the vSphere C# Client), you can leverage vSphere's extensive Role Based Access Control (RBAC) system. The specific privilege that governs whether a user can access the VM Console is under VirtualMachine->Interaction->Console interaction as seen in the screenshot below.

If a user is not granted this privilege for a particular VM, then when they click on either the Standalone VMRC link or the HTML5 VM Console, they will get permission denied and the screen will be blank. This makes it pretty simple to either prevent users from accessing the VM Console or to allow only VM Console access when they log in.

UPDATE (01/31/17): If you are using VMRC 8.1 or greater, you no longer need the additional permission assignment at the ESXi level if you ONLY want to provide VM Console access; just assign it to the VM. However, if you need to provide device management such as mounting an ISO on the client side, then you will still need to assign the VMRC role (along with the required privileges for device management) at the ESXi host level.

UPDATE (12/15/15): If you want to restrict users to having ONLY VM Console access, which may include the Standalone VMRC, you will need to ensure that the user has the role applied not only on the VMs you wish to restrict but also at the ESXi host level, since the Standalone VMRC still requires access to the ESXi host. You do not need to grant read-only permissions for the user at the ESXi level; you just need to assign the user the "VMRC"-only role at the ESXi level or higher to ensure they can connect with the VMRC.
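To make the rules above a bit more concrete, here is a small, self-contained Python model of them. It is only a toy sketch and does not talk to vCenter Server at all. The role names, the `Permission` class, and the helper functions are all made up for illustration, permission propagation is ignored, and `VirtualMachine.Interact.ConsoleInteract` is what I believe to be the privilege ID behind the "Console interaction" label, so please verify it in your own environment.

```python
from dataclasses import dataclass

# Assumption: privilege ID behind VirtualMachine->Interaction->Console interaction.
CONSOLE_PRIVILEGE = "VirtualMachine.Interact.ConsoleInteract"

# Hypothetical custom roles, each modeled as a set of privilege IDs.
ROLES = {
    "VMRC": {CONSOLE_PRIVILEGE},
    "PowerOnly": {"VirtualMachine.Interact.PowerOn"},
}


@dataclass(frozen=True)
class Permission:
    """A role assigned to a principal on a single inventory entity."""
    principal: str
    entity: str
    role: str


def has_privilege(principal, entity, privilege, permissions):
    """True if any role assigned to the principal on the entity holds the privilege."""
    return any(
        p.principal == principal and p.entity == entity and privilege in ROLES[p.role]
        for p in permissions
    )


def can_open_html5_console(principal, vm, permissions):
    """The HTML5 console is modeled as needing the privilege on the VM only."""
    return has_privilege(principal, vm, CONSOLE_PRIVILEGE, permissions)


def can_open_standalone_vmrc(principal, vm, host, permissions, vmrc_8_1_or_later):
    """Older Standalone VMRC versions also need the role at the ESXi host level."""
    if not has_privilege(principal, vm, CONSOLE_PRIVILEGE, permissions):
        return False
    if vmrc_8_1_or_later:
        return True
    return has_privilege(principal, host, CONSOLE_PRIVILEGE, permissions)


permissions = [
    Permission("alice", "vm-01", "VMRC"),
    Permission("alice", "esxi-01", "VMRC"),
    Permission("bob", "vm-01", "VMRC"),
    Permission("carol", "vm-01", "PowerOnly"),
]
```

In this model, `carol` is denied both consoles because her role lacks the console privilege, `bob` can use the HTML5 console but needs VMRC 8.1 or later for the Standalone VMRC, and `alice` can use either console with any VMRC version because her role is also assigned on the host.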
