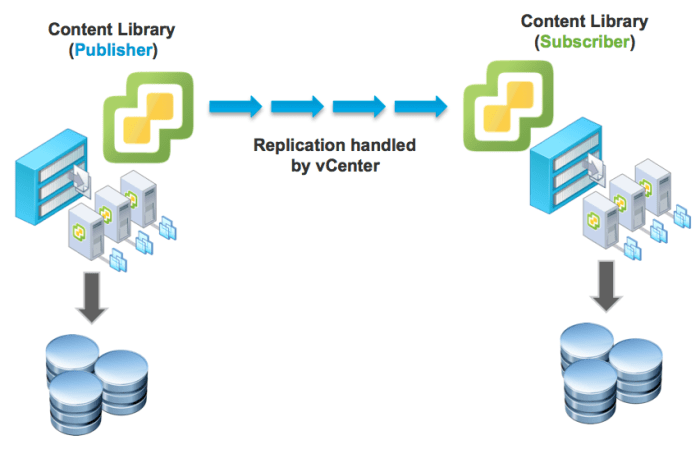

As the adoption of vSphere Content Library continues to grow, I am seeing more questions from our field and customers around content distribution. In case you did not know, vSphere Content Library (CL, as I will refer to it going forward) has its own built-in native replication mechanism, which allows customers to easily publish and subscribe to libraries either within a single vCenter Server instance or between two completely different vCenter Servers (regardless of deployment topology and/or SSO Domain configuration).

Content distribution (replication) is handled by CL, which runs as a service within vCenter Server. If content is being replicated within a single vCenter Server and the ESXi hosts can communicate with each other, a direct host-to-host transfer, also referred to as Network File Copy (NFC), is used rather than going through vCenter Server. When content is transferred between two vCenter Servers, the data travels through vCenter Server using standard HTTPS (port 443) by default. In the latter scenario, if you have configured Enhanced Linked Mode for your vCenter Servers, NFC will be used; if the ESXi hosts cannot communicate with each other, CL automatically falls back to the default HTTPS transfer, which is pretty cool.
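To make the selection rules above a bit more concrete, here is a small Python sketch that simply models them as a decision function. This is not a vSphere or CL API; `choose_transfer_method` and `TransferMethod` are hypothetical names used purely for illustration. One assumption worth calling out: for the single vCenter Server case where the hosts cannot reach each other, the sketch assumes the transfer goes through vCenter Server over HTTPS, which is implied but not explicitly stated above.

```python
from enum import Enum


class TransferMethod(Enum):
    # Hypothetical labels for the two transfer paths described above
    NFC = "NFC (direct host-to-host)"
    HTTPS = "HTTPS through vCenter Server (port 443)"


def choose_transfer_method(same_vcenter: bool,
                           enhanced_linked_mode: bool,
                           hosts_can_communicate: bool) -> TransferMethod:
    """Hypothetical model of how CL picks a transfer path (illustration only)."""
    if same_vcenter:
        # Single vCenter Server: NFC when the ESXi hosts can reach each other.
        # ASSUMPTION: otherwise the data goes through vCenter Server over HTTPS.
        return TransferMethod.NFC if hosts_can_communicate else TransferMethod.HTTPS
    if enhanced_linked_mode and hosts_can_communicate:
        # Two vCenter Servers in Enhanced Linked Mode with reachable hosts: NFC
        return TransferMethod.NFC
    # Default between two vCenter Servers, and the automatic ELM fallback
    return TransferMethod.HTTPS


if __name__ == "__main__":
    for same, elm, reachable in [(True, False, True), (False, False, True),
                                 (False, True, True), (False, True, False)]:
        method = choose_transfer_method(same, elm, reachable)
        print(f"same_vcenter={same}, elm={elm}, hosts_reachable={reachable} -> {method.value}")
```

The key takeaway is that Enhanced Linked Mode only changes the outcome when the hosts can actually talk to each other; in every other cross-vCenter case, the transfer goes through vCenter Server over HTTPS.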

One thing that may not be very well known is that customers actually have a choice in how their CL content is replicated. Native replication currently does not support incremental/delta updates, meaning every file transfer is a full copy, but in addition to native replication, CL can also support external replication. In fact, many customers today already have existing methods for efficiently replicating large amounts of data across multiple datacenters, whether that is replication built into their storage arrays, network appliances or some other means. These customers can still benefit from CL while continuing to take advantage of their existing replication methods.