The much-anticipated release of vSphere 6.5 has officially GA'ed, and you can find all the release notes and downloads over here. Just like prior releases, I have created a new Nested ESXi Virtual Appliance to aid in quickly setting up a vSphere 6.5 environment for both educational and lab purposes. If you have not used this Virtual Appliance before, I strongly recommend you thoroughly review this blog post here for the background before proceeding further.

Disclaimer: Nested ESXi and Nested Virtualization are not officially supported by VMware; please use this at your own risk (the usual).

The new ESXi 6.5 Virtual Appliance includes the following configuration:

- ESXi 6.5 OS [New]

- GuestType: ESXi 6.5 [New]

- vHW 11 [New]

- 2 vCPU

- 6GB vMEM

- 2 x VMXNET vNIC

- 1 x PVSCSI Adapter [New]

- 1 x 2GB HDD (ESXi Installation)

- 1 x 4GB SSD (for use w/VSAN, empty by default)

- 1 x 8GB SSD (for use w/VSAN, empty by default)

- VHV added (more info here)

- dvFilter Mac Learn VMX params added (more info here)

- disk.enableUUID VMX param added

- VSAN traffic tagged on vmk0

- Disabled VSAN device monitoring for home labs (more info here)

- VMFS6 will be used if user selects to create VMFS volume [New]

- Enabled sparse swap (more info here) [New]

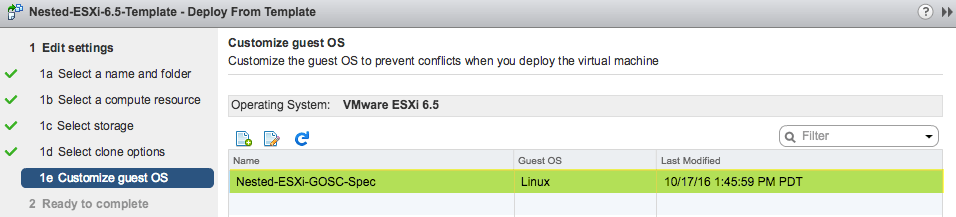
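If you are curious what the VHV, dvFilter MAC Learn and disk.enableUUID items above look like in the VM's .vmx file, here is a small Python sketch that renders them. It assumes the usual `vhv.enable`, `ethernetN.filter4.name = "dvfilter-maclearn"` / `ethernetN.filter4.onFailure = "failOpen"` and `disk.enableUUID` entries; the filter slot (4), the NIC count of 2 and the function names are my own illustrative choices, not something pulled from the OVA itself.

```python
# Illustrative sketch only: renders the extra VMX entries described in the
# configuration list. Filter slot 4 and two vNICs are assumptions.


def nested_esxi_vmx_params(num_nics: int = 2, filter_slot: int = 4) -> dict:
    """Return a mapping of VMX keys to values for a nested ESXi VM."""
    params = {
        "vhv.enable": "TRUE",       # expose hardware-assisted virtualization (VHV) to the guest
        "disk.enableUUID": "TRUE",  # present a consistent disk UUID to the guest OS
    }
    # Attach the MAC Learn dvFilter to every vNIC so nested VMs' MACs are learned
    for nic in range(num_nics):
        prefix = f"ethernet{nic}.filter{filter_slot}"
        params[f"{prefix}.name"] = "dvfilter-maclearn"
        params[f"{prefix}.onFailure"] = "failOpen"  # keep traffic flowing if the filter fails
    return params


def render_vmx(params: dict) -> str:
    """Format entries using the .vmx syntax: key = "value", one per line."""
    return "\n".join(f'{key} = "{value}"' for key, value in params.items())


if __name__ == "__main__":
    print(render_vmx(nested_esxi_vmx_params()))
```

With the defaults this yields six entries: the two global settings plus a name/onFailure pair for each of the two vNICs.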

To be able to import and use this new ESXi VA, you will need to be running at least vSphere 6.0 Update 2 in your environment, as I take advantage of some of the new Nested ESXi enhancements in vSphere 6.5. If you need to run ESXi 6.5 on earlier versions of vSphere, you can take my existing 5.5 or 6.0 VAs and manually upgrade them to 6.5.
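As a quick sanity check before importing, the minimum-version rule boils down to a simple ordered comparison of (major, minor, update). The sketch below is purely illustrative: the `parse_version` and `can_import_appliance` helpers are hypothetical names, and it only understands strings in the form "6.0" or "6.0 Update 2", treating a GA release as Update 0.

```python
from typing import NamedTuple


class VSphereVersion(NamedTuple):
    """Tuple ordering compares major, then minor, then update."""
    major: int
    minor: int
    update: int = 0  # GA release is treated as "Update 0"


# Minimum required to import the ESXi 6.5 VA: vSphere 6.0 Update 2
MINIMUM = VSphereVersion(6, 0, 2)


def parse_version(text: str) -> VSphereVersion:
    """Parse strings such as '6.0', '6.5' or '6.0 Update 2' (hypothetical format)."""
    parts = text.split()
    major, minor = (int(x) for x in parts[0].split(".")[:2])
    update = 0
    if len(parts) == 3 and parts[1].lower() == "update":
        update = int(parts[2])
    return VSphereVersion(major, minor, update)


def can_import_appliance(version_text: str) -> bool:
    """True if the environment meets the vSphere 6.0 Update 2 minimum."""
    return parse_version(version_text) >= MINIMUM


if __name__ == "__main__":
    for v in ("5.5", "6.0 Update 1", "6.0 Update 2", "6.5"):
        print(v, can_import_appliance(v))
```

Using a NamedTuple keeps the comparison readable while relying on Python's built-in lexicographic tuple ordering.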

Now that you have made it this far, here is the download: Nested_ESXi6.5d_Appliance_Template_v1.ova

Lastly, I have also spent some time building some new automation scripts which take advantage of my Nested ESXi VAs and deploy a fully functional vSphere lab environment without even breaking a sweat. Below is a little sneak peek at what you can expect 😀 Watch the blog for more details!