As many of you have probably heard by now, vSphere 6.5 was just announced at VMworld Barcelona this week and it is packed with a ton of new features and capabilities which you can read more about here. One area that is near and dear to me and has not been covered are some of the Nested ESXi enhancements that have been made in vSphere 6.5.

To be clear, VMware has NOT changed its support stance in vSphere 6.5. Both Nested ESXi as well as general Nested Virtualization is still NOT officially supported. Okay, so thats out of the way now, lets see what is new?

- Paravirtual SCSI (PVSCSI) support

- GuestOS Customization

- Pre-vSphere 6.5 enablement on vSphere 6.0 Update 2

- Virtual NVMe support

Lets take a closer look at each of these enhancements.

Paravirtual SCSI (PVSCSI) support

When vSphere 6.0 Update 2 was released, I had hinted that PVSCSI support might be a possibility in the near future. I am happy to announce that this is now possible with running Nested ESXi and having the guestOS as ESXi 6.5. In vSphere 6.5, a new GuestOS type has been introduced called vmkernel65 which is optimized for running ESXi 6.5 in a VM as shown in the screenshot below.

As you can see from the VM Virtual Hardware configuration screen below, both the PVSCSI and VMXNET3 adapter are now the recommended default when creating a Nested ESXi VM for running ESXi 6.5.

Similiar to the VMXNET3 driver, the PVSCSI driver is automatically bundled within the version of VMware Tools for Nested ESXi. That is to say, the drivers are included in the default ESXi image itself and is ONLY activated when ESXi detects that it is running inside of a VM. From a user standpoint, this means there are no additional configurations or installations that is required. You simply select ESXi 6.5 as the GuestOS and install ESXi as you normally would and this will automatically be enabled for you.

The only requirement to leverage this new capability is that BOTH the GuestOS type is ESXi 6.5 (vmkernel65) and the actual OS is running ESXi 6.5. The underlying physical ESXi host can either be ESXi 6.0 or ESXi 6.5. In addition to new virtual hardware defaults, I have also found that the new ESXi 6.5 GuestOS type now uses EFI firmware over the legacy BIOS compared to previous ESXi 6.x/5.x/4.x GuestOS types.

For customers who wish to push their storage IO a bit more for Nested ESXi guests, this is a great addition, especially with lower overhead when using a PVSCSI adapter.

GuestOS Customization

One of the very last capability that has been missing from Nested ESXi is the ability to perform a simple GuestOS customization when cloning or deploying a VM from template running Nested ESXi. Today, you can deploy my Nested ESXi Virtual Appliance which basically provides you with the ability to customize your deployment but would it not be great if this was native in the platform? Well, I am pleased to say this is now possible!

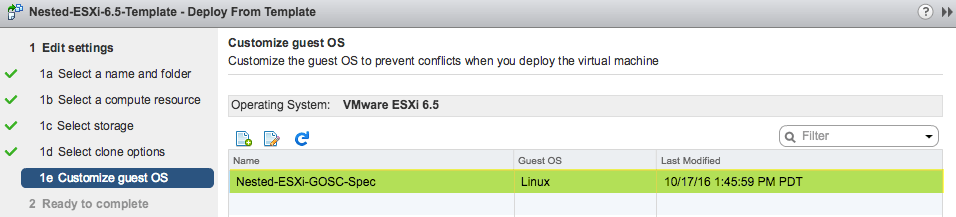

When you go and clone or deploy a VM from template that is a Nested ESXi VM, you will now have the option to select the Customize guest OS option. As you can see from the screenshot below, you can now create a new Customization Spec which is based on the Linux customization spec. The customization only covers networking configuration (IP Address, Netmask, Gateway and Hostname) and only applies it to the first VMkernel interface, all others will be ignored. The thinking here is that once you have your Nested ESXi VM on the network, you can then fully manage and configure it using the vSphere API rather than re-creating the same functionality just for cloning.

To use this new Nested ESXi GuestOS customization, there are two things you will need to do:

- Perform two configuration changes within the Nested ESXi VM which will prepare them for cloning. You can find the configuration changes described in my blog post here

- Ensure BOTH the GuestOS type is ESXi 6.5 (vmkernel65) and the actual OS is running ESXi 6.5. This means that your underlying physical vSphere infrastructure can be running either vSphere 6.0 Update 2 or vSphere 6.5

You can monitor the progress of the guest customization by going to the VM's Monitor->Tasks & Events using the vSphere Web Client or vSphere API if you are doing this programmatically. Below is a screenshot of a successful Nested ESXi guest customization. If there are any errors, you can take a look at /var/log/vmware-imc/toolsDeployPkg.log within the cloned Nested ESXi VM to determine what went wrong.

I know this will be a very welcome capability for customers who extensively use the guest customization feature or if you just want to quickly clone an existing Nested ESXi VM that you have already configured.

Pre-vSphere 6.5 enablement in vSphere 6.0 Update 2

By now, you probably have figured out what this last enhancement is all about 🙂 It is exactly as it sounds, we are enabling customers to try out ESXi 6.5 by running it as a Nested ESXi VM on your existing vSphere 6.0 environment and specifically the Update 2 release (this includes both vCenter Server as well as ESXi). Although this has always been possible with past releases of vSphere running newer versions, we are now pre-enabling ESXi 6.5 specific Nested ESXi capabilities in the latest release of vSphere 6.0 Update 2. This means when vSphere 6.5 is generally available, you will be able to test drive some of the new Nested ESXi 6.5 capabilities that I had mentioned on your existing vSphere infrastructure. This is pretty darn cool if you ask me!?

Virtual NVMe support

I had a few folks ask on whether the upcoming Virtual NVMe capability in vSphere 6.5 would be possible with Nested ESXi and the answer is yes. Please have a look at this post here for more details.

For those of you who use Nested ESXi, hopefully you will enjoy these new updates! As always, if you have any feedback or requests, feel free to leave them on my blog and I will be sure to share it with the Engineering team and who knows, it might show up in the future just like some of these updates which have been requested from customers in the past 😀