In vSphere 6.0, you can import your vCenter Server's trusted root CA certificate onto your client desktop by downloading it from the vCenter Server's landing page, as shown in the screenshot below. Michael White also recently wrote about this topic here, including a step-by-step walkthrough.

Several weeks back, I was working on an internal project which required the vCenter Server's root certificate. I was already aware of this interface and had written a quick and dirty script to automate downloading the certificate and importing it on the system I was working on. To be honest, I did not think much of the script after I wrote it. It was only recently that Alan Renouf, who was also involved in the project, mentioned that it might be worth sharing the script, as others might also find it useful. I thought that was a good idea and refactored the code a bit, since it was being used in a slightly different context. While doing so, I also created an equivalent PowerShell sample, since the original script was meant to run only on Mac OS X or Linux.

With that, I have created a simple shell script called import-vcrootcertificate.sh, which can run on either a Mac OS X or Linux system, and a PowerShell script called Import-VCRootCertificate.ps1.

Both scripts are pretty easy to use. They accept a single command-line argument: the hostname or IP address of the vCenter Server that you wish to import the root certificate from. Before performing the import, both scripts detect whether the vCenter Server is running on Windows or is the VCSA, since the two use a slightly different URL for the root certificate. Since the scripts need access to your certificate store, you will need to run them using a privileged account.
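To make that flow a bit more concrete, here is a minimal sketch of the pre-import steps in shell: checking for the single argument, checking for a privileged account, and picking a per-platform URL. This is not the actual import-vcrootcertificate.sh. The function names (`check_args`, `is_privileged`, `cert_url`) are hypothetical, and the two URL paths are deliberately placeholders, since the real paths are not spelled out in this post.

```shell
#!/bin/bash
# Illustrative sketch only; not the actual import-vcrootcertificate.sh.

# ASSUMPTION: placeholder paths. The real Windows/VCSA certificate paths
# are not given in this post, so substitute your own before using this.
WINDOWS_CERT_PATH="${WINDOWS_CERT_PATH:-/path/for/windows-vc}"
VCSA_CERT_PATH="${VCSA_CERT_PATH:-/path/for/vcsa}"

# Succeed only when exactly one argument (hostname or IP) is supplied.
check_args() {
    if [ "$#" -ne 1 ]; then
        echo "Usage: $0 <vcenter-hostname-or-ip>" >&2
        return 1
    fi
}

# Succeed only when running as root, since the import touches the
# system certificate store.
is_privileged() {
    [ "$(id -u)" -eq 0 ]
}

# Print the download URL for a host and platform ("windows" or "vcsa").
cert_url() {
    local host="$1" platform="$2"
    case "$platform" in
        windows) printf 'https://%s%s\n' "$host" "$WINDOWS_CERT_PATH" ;;
        vcsa)    printf 'https://%s%s\n' "$host" "$VCSA_CERT_PATH" ;;
        *)       echo "unknown platform: $platform" >&2; return 1 ;;
    esac
}
```

With these helpers, something like `check_args "$@" && is_privileged && cert_url "$1" vcsa` would print the URL to fetch. How the platform is actually detected, and how the certificate is imported into the store, is left out here because it depends on the operating system.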

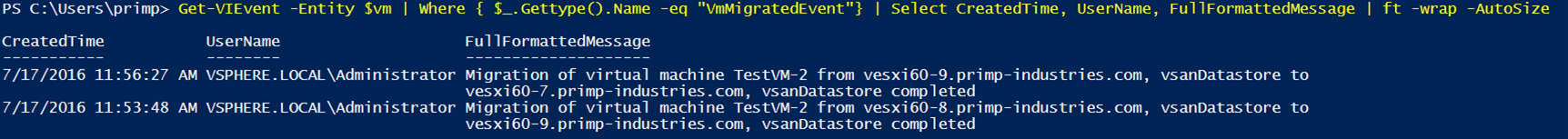

Here is a screenshot of running the PowerShell script: