I am a huge fan of HashiCorp Packer, which makes it extremely easy to automate Virtual Machine images for vSphere, including OVF, OVA and vSphere Content Library Templates. Packer supports two vSphere providers (builders, in Packer's terminology): vmware-iso, which requires SSH access to an ESXi host, and vsphere-iso, which does not require ESXi access but instead connects to vCenter Server using the vSphere API, the preferred method for vSphere Automation.

I started working with Packer and the vmware-iso provider several years ago, and because there is not 100% parity between the two vSphere providers, I had not really looked at the vsphere-iso provider or even attempted to transition over. I was recently working on some automation within my VMware Cloud on AWS (VMConAWS) SDDC, and since this is a VMware managed service, customers have neither access to the underlying ESXi hosts nor SSH access to them. I thought this would be a good time to explore the vsphere-iso provider and see if I could make it work in a couple of different networking scenarios.

For customers who normally establish either a Direct Connect (DX) or VPN (Policy or Route-based) from their on-premises environment to their SDDC, nothing special needs to be set up to use Packer. However, if you are like me and do not always have this type of connectivity in place, or if you wish to use Packer directly over the internet to your SDDC, then some additional configuration will be needed.

UPDATE (04/12/22) - A floppy option can now be used with Photon OS to host the kickstart file; see this GitHub issue for an example.

Packer Connectivity Scenarios

In both scenarios below, a DX/VPN connection to the VMConAWS SDDC is neither configured nor relied upon.

Scenario 1 - Run Packer locally on your desktop and connect to the VMConAWS SDDC over the public internet. Because the SDDC will not be able to communicate with the system running Packer, the required configuration files cannot be served by Packer's HTTP server, nor can you use any of the communicators, since they expect to be able to reach the provisioned VM (for example over SSH) on the internal IP address that has been allocated to it.

Scenario 2 - Run Packer in a Linux bastion (jumphost) VM that runs within the VMConAWS SDDC and configure an SDDC firewall rule to allow connectivity between that VM and the SDDC Management CIDR. This enables you to use Packer's built-in web server to serve the kickstart configuration file to the VM being created, and also to use the SSH communicator for additional post-provisioning automation.

To help demonstrate a solution for both of these scenarios, I have created a GitHub repo called vmc-packer-example which contains an example for both Ubuntu and PhotonOS. The Ubuntu example relies on a guest OS that supports a floppy device, which is how it retrieves the kickstart configuration file, and it can be a solution for Scenario 1. The PhotonOS example relies on Packer's built-in HTTP server to serve the kickstart configuration, and it can be a solution for Scenario 2.
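As a rough illustration of the Scenario 1 approach, a vsphere-iso builder can attach the answer file as a floppy and disable the communicator, so nothing in the SDDC needs to reach back to the machine running Packer. This is only a sketch and is not copied from the vmc-packer-example repo: the VM name, ISO path and answer file name are hypothetical placeholders, and the vCenter connection settings and boot command are omitted.

```json
{
  "_comment": "Illustrative sketch only. All values are hypothetical placeholders; connection settings and boot_command are omitted.",
  "builders": [
    {
      "type": "vsphere-iso",
      "vm_name": "ubuntu-template",
      "guest_os_type": "ubuntu64Guest",
      "iso_paths": ["[WorkloadDatastore] ISO/ubuntu-server.iso"],
      "floppy_files": ["preseed.cfg"],
      "communicator": "none"
    }
  ]
}
```

With `communicator` set to `none`, Packer cannot run provisioners inside the guest, so any customization has to happen through the answer file itself.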

Note: I was hoping PhotonOS could also read a kickstart file from a floppy device, but it looks like PhotonOS 3.0 does not see the device. Although PhotonOS 4.0 has improved and lists the device, it is not automatically mounted and hence cannot be used. There have been several requests from the community to add this support, and I have shared my latest findings in this GitHub PR.

Packer Examples

To use either the Ubuntu or Photon example, simply clone the GitHub repo and update the respective Packer JSON configuration file with your environment configuration. In addition, you will need to download the respective ISO file (or another version, based on your needs) and upload it to your SDDC.
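For orientation, the environment-specific settings in a vsphere-iso template for Scenario 2 might look something like the sketch below. Every value is an assumption to be replaced with your own: the vCenter hostname and credentials are placeholders, the inventory names are the ones commonly seen in a default SDDC (verify them in yours), and the ISO path and `http` directory name are hypothetical. The repo's actual JSON files may be organized differently, for example by using variables.

```json
{
  "_comment": "Illustrative sketch only. Replace every value with details from your own SDDC; boot_command and hardware settings are omitted.",
  "builders": [
    {
      "type": "vsphere-iso",
      "vcenter_server": "vcenter.sddc-x-x-x-x.vmwarevmc.com",
      "username": "cloudadmin@vmc.local",
      "password": "REPLACE_ME",
      "insecure_connection": true,
      "datacenter": "SDDC-Datacenter",
      "cluster": "Cluster-1",
      "resource_pool": "Compute-ResourcePool",
      "folder": "Workloads",
      "datastore": "WorkloadDatastore",
      "vm_name": "photon-template",
      "iso_paths": ["[WorkloadDatastore] ISO/photon.iso"],
      "http_directory": "http",
      "ssh_username": "root",
      "ssh_password": "REPLACE_ME"
    }
  ]
}
```

Here `http_directory` points Packer's built-in web server at the folder holding the kickstart file, and the `ssh_*` settings are what the SSH communicator uses once the guest is up, which is why this variant only works when the VM and the Packer host can reach each other.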

Once you have saved your configuration, simply run the following command to start the packer build process:

packer build [JSON-CONFIGURATION]

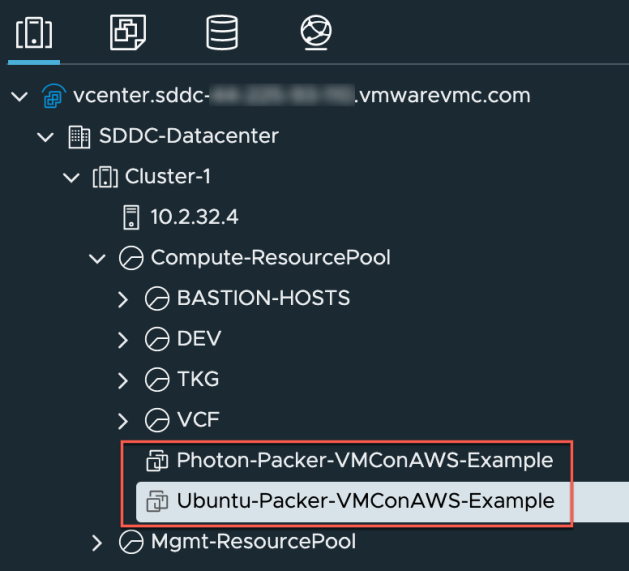

If everything was configured correctly, you should see the newly built VM in your SDDC Inventory as shown in the screenshot below.

I would be remiss if I did not mention Ryan Johnson's amazing vSphere Packer Repository, which includes complete working templates for automating Microsoft Windows Server, Red Hat Linux, CentOS and PhotonOS. Definitely check it out, as it is a great resource for using Packer's vsphere-iso provider.

Really good article. I've been doing this for a while, and I've found Windows to be far more complex for post-deployment customization; it is an essential requirement that the floppy mounts for the kicker and customization scripts.

With Packer on Proxmox, I have managed to get those customization scripts to work via an ISO mount. I wonder if that would work on vSphere.