Supermicro kits such as the E200-8D are very popular platforms amongst the VMware community, and with powerful Xeon-based CPUs and support for up to 128GB of memory, they are perfect for running a killer vSphere/vSAN setup!

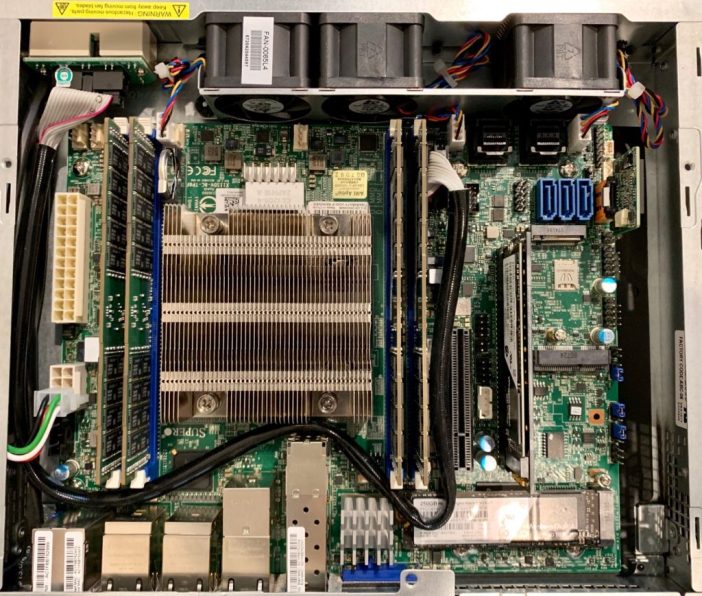

Earlier this Fall, Supermicro released a "big daddy" version of the E200-8D, dubbed the E300-9D. Specifically, I want to focus on the 8-Core model (SYS-E300-9D-8CNTP), as this system is actually listed on the VMware HCL for ESXi! The E300-9D can support up to half a terabyte of memory, and with the 8-Core model, you have access to 16 threads. The E200-8D is also a VMware-supported platform, and you can find its VMware HCL listing here.

I was very fortunate to get my hands on a loaner E300-9D (8-Core) unit, thanks to Eric and his team at MITXPC, a local Bay Area shop specializing in embedded solutions. In fact, they even provided a nice vGhetto promo discount code for my readers a while back, so definitely check it out if you are in the market for a new lab. As an aside, from a quick search online, they also seem to be the only ones actually selling the E300-9D (8-Core) system, which you can find here, and in general, their pricing seems fairly competitive. This is not an endorsement of MITXPC, and I would recommend that folks compare prices when shopping online, especially as today is Black Friday in the US and Cyber Monday is just a few days away.